SDK Usage for Spark Jobs

IOMETE's Python SDK provides a simple and convenient way to interact with our platform using Python code. With our SDK, you can easily integrate our platform's functionality into your Python projects and automate various tasks.

This document shows how to use the iomete-sdk Python module to manage Spark jobs on the IOMETE platform: creating, updating, and deleting jobs, and submitting, listing, and cancelling job runs.

If you have any questions or feedback, please do not hesitate to reach out to our support team.

Installation

To follow these examples, make sure you have the following dependency installed:

pip install iomete-sdk

Prerequisites

You also need a valid IOMETE API token and a workspace ID. In these examples, the API token is read from the TEST_TOKEN environment variable and the workspace ID is set directly in the code.

In the Resources section below, you can find a link to the documentation on how to create an API token.

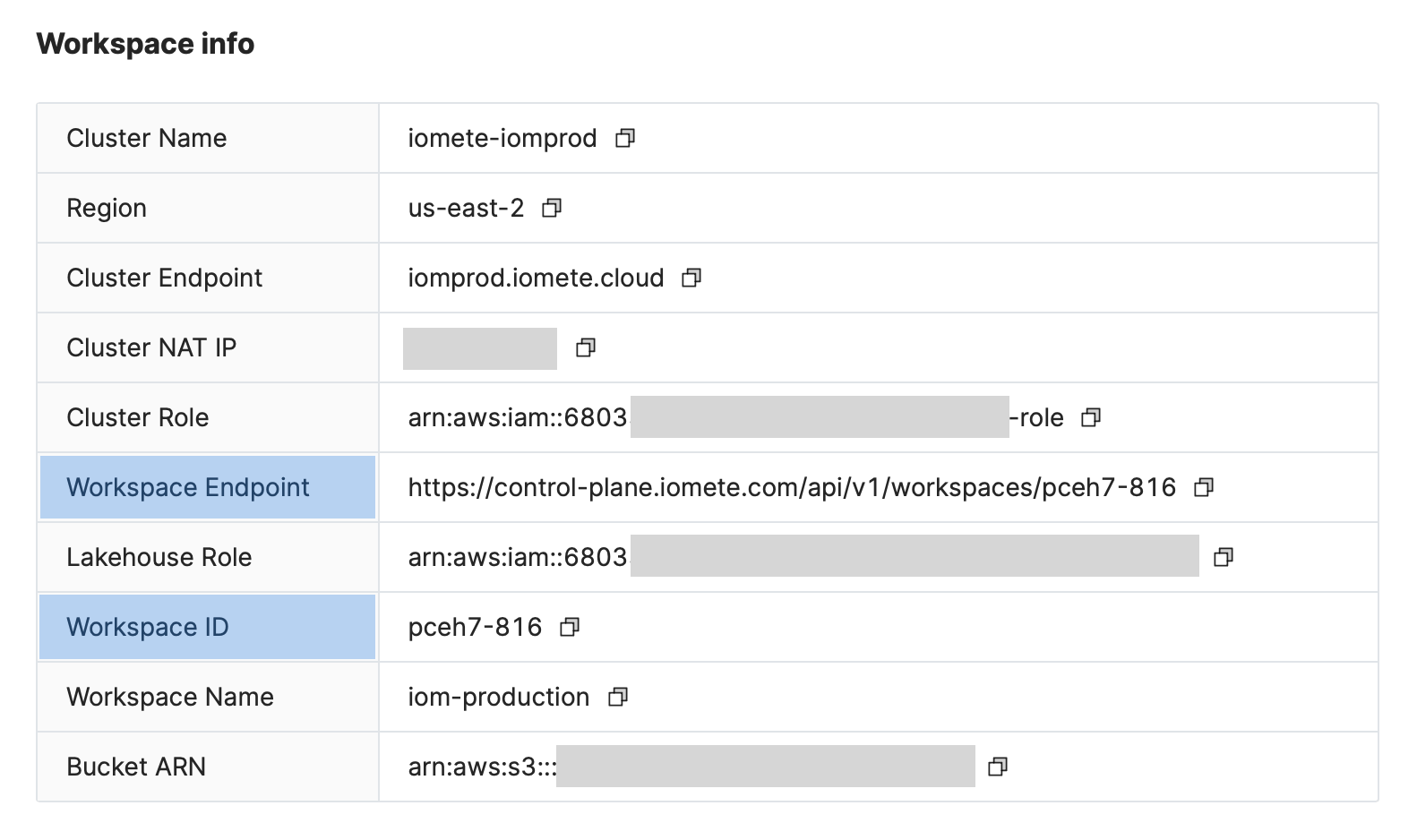

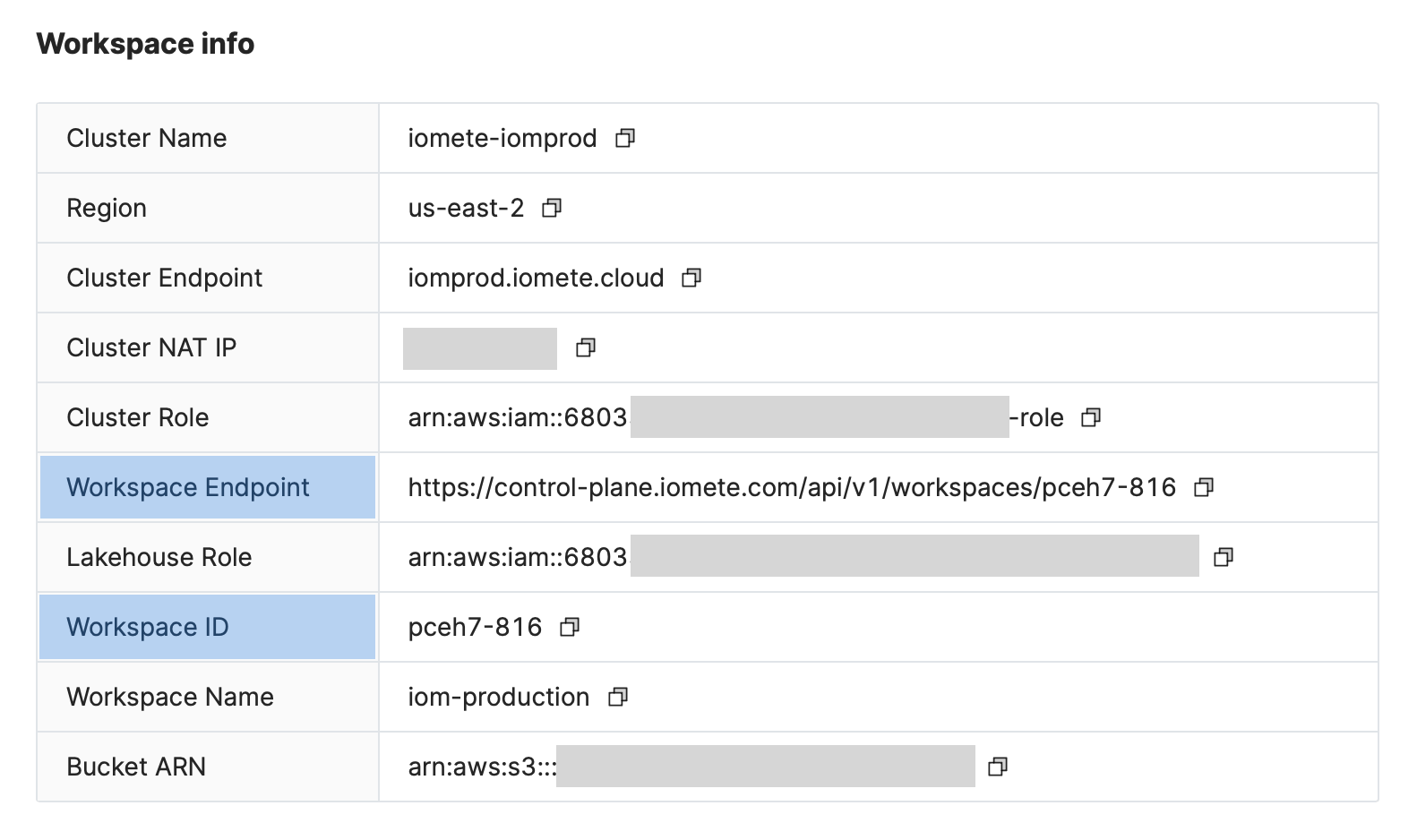

The workspace ID and the endpoint (used in the cURL examples) are shown on the Workspace Settings page of the IOMETE platform.

SDK Usage Examples

Initialization

First, import the required libraries and create a SparkJobApiClient instance to interact with the IOMETE platform:

import os
import uuid

from iomete_sdk import SparkJobApiClient

# The API token is read from the TEST_TOKEN environment variable.
TEST_TOKEN = os.environ.get("TEST_TOKEN")
WORKSPACE_ID = "pceh7-816"

job_client = SparkJobApiClient(
    workspace_id=WORKSPACE_ID,
    api_key=TEST_TOKEN,
)
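Because `os.environ.get` returns `None` when a variable is unset, a missing token would only surface later as a failed API call. The following is a minimal sketch of a fail-fast check, assuming the token is stored in `TEST_TOKEN` as above. The helper name `require_env` is hypothetical and is not part of iomete-sdk.

```python
import os


def require_env(name, env=None):
    """Return the value of environment variable `name`, or raise if unset or empty."""
    # `env` defaults to the real process environment; passing a dict makes
    # the helper easy to exercise without touching os.environ.
    env = os.environ if env is None else env
    value = env.get(name)
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value
```

With this helper, the token line could read `TEST_TOKEN = require_env("TEST_TOKEN")`, so a missing token is reported before any client is created.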

Creating a Job

Here's an example of how to create a new job:

Python:

# Job definition: a name plus a Spark application template.
job_payload = {
    "name": "test-job",
    "template": {
        "sparkVersion": "3.2.1",
        "mainApplicationFile": "local:///opt/spark/examples/jars/spark-examples_2.12-3.2.1-iomete.jar",
        "mainClass": "org.apache.spark.examples.SparkPi",
        "arguments": ["10"]
    }
}

job_create_response = job_client.create_job(payload=job_payload)
print(job_create_response)

cURL:

curl --location --request POST 'https://control-plane.iomete.com/api/v1/workspaces/pceh7-816/jobs' \
  --header 'Authorization: Bearer TEST_TOKEN' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "name": "test-job",
    "template": {
        "sparkVersion": "3.2.1",
        "mainApplicationFile": "local:///opt/spark/examples/jars/spark-examples_2.12-3.2.1-iomete.jar",
        "mainClass": "org.apache.spark.examples.SparkPi",
        "arguments": ["10"]
    }
}'

This will create a new job with a name, a specific Spark version, main application file, main class, and arguments.
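To see what the create call looks like over HTTP from Python, the cURL request above can be sketched with the standard library. This is an illustration under stated assumptions, not how iomete-sdk is implemented: the function name `build_create_job_request` is hypothetical, and the URL, headers, and body are copied from the cURL example. The request is only built, not sent.

```python
import json
import urllib.request

# Values copied from the cURL example; replace them with your own.
BASE_URL = "https://control-plane.iomete.com/api/v1"
WORKSPACE_ID = "pceh7-816"


def build_create_job_request(token, payload):
    """Build (but do not send) the POST request that creates a job."""
    return urllib.request.Request(
        url=f"{BASE_URL}/workspaces/{WORKSPACE_ID}/jobs",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_create_job_request(
    token="TEST_TOKEN",  # placeholder, as in the cURL example
    payload={
        "name": "test-job",
        "template": {
            "sparkVersion": "3.2.1",
            "mainApplicationFile": "local:///opt/spark/examples/jars/spark-examples_2.12-3.2.1-iomete.jar",
            "mainClass": "org.apache.spark.examples.SparkPi",
            "arguments": ["10"],
        },
    },
)

# Inspect the request without touching the network.
print(request.get_method(), request.full_url)
```

Passing the object to `urllib.request.urlopen(request)` would actually send it. In most cases the SDK call is the simpler choice.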

All Spark job APIs accept either the job ID or the job name. You can use either one in the request URL to identify the job.

For example, both

.../api/v1/workspaces/pceh7-816/jobs/{job_id} and

.../api/v1/workspaces/pceh7-816/jobs/{job_name}

work. For simplicity, the cURL examples below use the job name test-job.
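Since the same URL pattern serves both identifiers, one helper can build it for either. A minimal sketch, assuming the endpoint shown in the cURL examples. The helper name `job_url` is hypothetical.

```python
from urllib.parse import quote

# Endpoint taken from the cURL examples; yours may differ.
BASE_URL = "https://control-plane.iomete.com/api/v1"


def job_url(workspace_id, job_ref):
    """Return the job URL for `job_ref`, which may be a job ID or a job name."""
    # quote(..., safe="") percent-encodes characters such as "/" or spaces,
    # which matters if a job name contains them.
    workspace = quote(workspace_id, safe="")
    job = quote(job_ref, safe="")
    return f"{BASE_URL}/workspaces/{workspace}/jobs/{job}"
```

For example, `job_url("pceh7-816", "test-job")` yields the URL used in the cURL examples for the test-job job.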

Updating a Job

To update a job, you can use the update_job method. Here's an example of how to update a job to add a schedule:

Python:

cron_schedule = "0 0 */1 * *"

# Start from the created job and add a schedule to it.
update_payload = job_create_response.copy()
update_payload["schedule"] = cron_schedule

job_update_response = job_client.update_job(job_id=job_create_response["id"], payload=update_payload)
print(job_update_response)

cURL:

curl --location --request PUT 'https://control-plane.iomete.com/api/v1/workspaces/pceh7-816/jobs/test-job' \
  --header 'Authorization: Bearer TEST_TOKEN' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "name": "test-job",
    "template": {
        "sparkVersion": "3.2.1",
        "mainApplicationFile": "local:///opt/spark/examples/jars/spark-examples_2.12-3.2.1-iomete.jar",
        "mainClass": "org.apache.spark.examples.SparkPi",
        "arguments": ["10"]
    },
    "schedule": "0 0 */1 * *"
}'

This will update the job created earlier with a schedule that runs it once every day.
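To see why this expression means "once every day", it helps to name its fields. The sketch below assumes the schedule follows the standard five-field cron layout (minute, hour, day of month, month, day of week). The helper name `cron_fields` is hypothetical and only splits the string; it does not validate field values.

```python
CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def cron_fields(expression):
    """Split a five-field cron expression into named fields."""
    parts = expression.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError(f"expected 5 cron fields, got {len(parts)}: {expression!r}")
    return dict(zip(CRON_FIELDS, parts))


print(cron_fields("0 0 */1 * *"))
# {'minute': '0', 'hour': '0', 'day_of_month': '*/1', 'month': '*', 'day_of_week': '*'}
```

Read this way, the schedule fires at minute 0 of hour 0 on every day of the month (`*/1` is a step of one day), which is once a day at 00:00.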

Deleting a Job

To delete a job, use the delete_job_by_id method:

Python:

job_client.delete_job_by_id(job_id=job_create_response["id"])

cURL:

curl --location --request DELETE 'https://control-plane.iomete.com/api/v1/workspaces/pceh7-816/jobs/test-job' \
  --header 'Authorization: Bearer TEST_TOKEN'

This will delete the job created earlier using its ID.

Running a Job

To submit a job run, use the submit_job_run method. This will submit a new run for the job created earlier:

Python:

# An empty payload runs the job with its saved configuration.
job_run_response = job_client.submit_job_run(job_id=job_create_response["id"], payload={})
print(job_run_response)

cURL:

curl --location --request POST 'https://control-plane.iomete.com/api/v1/workspaces/pceh7-816/jobs/test-job/runs' \
  --header 'Authorization: Bearer TEST_TOKEN' \
  --header 'Content-Type: application/json' \
  --data-raw '{}'

To override part of the configuration for a single run, for example the arguments, pass the overrides in the payload.

Python:

# Override the job's arguments for this run only.
job_run_response = job_client.submit_job_run(job_id=job_create_response["id"], payload={
    "arguments": ["arg1", "arg2"]
})
print(job_run_response)

cURL:

curl --location --request POST 'https://control-plane.iomete.com/api/v1/workspaces/pceh7-816/jobs/test-job/runs' \
  --header 'Authorization: Bearer TEST_TOKEN' \
  --header 'Content-Type: application/json' \
  --data-raw '{ "arguments": ["arg1", "arg2"] }'
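The two run examples differ only in whether the payload carries overrides. A small sketch of building that payload follows. The helper name `build_run_payload` is hypothetical, and `arguments` is the only override key shown in this document, so it is the only one handled here.

```python
def build_run_payload(arguments=None):
    """Return a run payload: empty by default, or with overridden arguments."""
    payload = {}
    if arguments is not None:
        # The examples in this document pass arguments as strings,
        # so other values are converted to match.
        payload["arguments"] = [str(arg) for arg in arguments]
    return payload
```

The result can be passed as the `payload` argument of `submit_job_run`. Calling `build_run_payload()` gives the empty payload from the first example.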

Getting Job Runs

To get the runs for a specific job, use the get_job_runs method:

Python:

job_runs = job_client.get_job_runs(job_id=job_create_response["id"])
print(job_runs)

cURL:

curl --location --request GET 'https://control-plane.iomete.com/api/v1/workspaces/pceh7-816/jobs/test-job/runs' \
  --header 'Authorization: Bearer TEST_TOKEN'

This will return a list of runs for the job.

Cancelling a Job Run

To cancel a job run, use the cancel_job_run method:

Python:

job_client.cancel_job_run(job_id=job_create_response["id"], run_id=job_run_response["id"])

cURL:

curl --location --request POST 'https://control-plane.iomete.com/api/v1/workspaces/pceh7-816/jobs/test-job/runs/{run_id}/cancel' \
  --header 'Authorization: Bearer TEST_TOKEN'

Note: Replace {run_id} with the actual run ID.

This will cancel the job run submitted earlier.
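Cancelling depends on the run ID returned when the run was submitted. A minimal sketch of that hand-off is shown below. It assumes `client` is a SparkJobApiClient created as in the Initialization section, and that the submit response can be indexed by "id" as in the example above. The helper name `submit_and_cancel` is hypothetical.

```python
def submit_and_cancel(client, job_id):
    """Submit a run for `job_id`, cancel it, and return the run ID."""
    run = client.submit_job_run(job_id=job_id, payload={})
    run_id = run["id"]  # assumption: the response exposes the run ID under "id"
    client.cancel_job_run(job_id=job_id, run_id=run_id)
    return run_id
```

Keeping the two calls in one function makes it harder to cancel with a stale or undefined run ID.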