Getting Started with Spark Jobs

This guide helps you write your first Spark Job and deploy it on the IOMETE platform.

This guide uses a sample PySpark job, but you can use any other supported language, such as Scala or Java.

Quickstart

IOMETE provides a PySpark quickstart template for AWS and a PySpark quickstart template for GCP to help you get started with your first Spark Job. Follow the instructions in the template's README file to set it up.

Sample Job

The template already contains a sample job that reads a CSV file from an S3 bucket, runs some transformations, and writes the output to an Iceberg table.

Apache Iceberg is an open table format for large analytic datasets. It works with Apache Spark and improves on older table formats by providing ACID transactions, scalable metadata handling, and a unified specification for both streaming and batch data.

How can I use this template?

This template is meant to be used as a starting point for your own jobs. You can use it as follows:

1. Clone the template repository

2. Modify the code and tests to fit your needs

3. Build the Docker image and push it to your Docker registry

4. Create a Spark Job in the IOMETE Control Plane

5. Run the Spark Job

6. Modify the code and tests as needed

7. Go back to step 3

If you are just starting with PySpark on IOMETE, you can explore the sample code without modifying it. This will help you understand the process of creating and running a Spark Job.

Project Structure

The project is composed of the following folders/files:

- infra/: contains the requirements and Dockerfile files
  - requirements-dev.txt: the list of Python packages to install for development
  - requirements.txt: the list of Python packages to install for production. This requirements file is used to build the Docker image
  - Dockerfile: the Dockerfile used to build the Spark job image

- spark-conf/: contains the Spark configuration files for the development environment
  - spark-defaults.conf: the Spark configuration
  - log4j.properties: the log4j configuration for the PySpark job, used to set the job's logging level

- test_data/: the test data for the job's unit and integration tests

- job.py: the Spark job code. The template comes with sample code that reads a CSV file from S3 and writes the data to a table in the Lakehouse. Feel free to modify the code to fit your needs.

- test_job.py: the Spark job tests. The template comes with a sample test that reads the test data from test_data/ and asserts the output of the job. Feel free to modify the tests to fit your needs.

- Makefile: the commands to install dependencies, run the job and tests, and build and push the Docker image
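Before building the image, you may want to confirm that your clone still contains the files listed above. The following is a minimal sketch that assumes the paths exactly as listed; the helper missing_template_files is hypothetical and not part of the template.

```python
from pathlib import Path
from typing import List

# Paths from the project structure above. This check is a hypothetical helper,
# not part of the template; adjust the list if your clone differs.
EXPECTED_PATHS = [
    "infra/requirements-dev.txt",
    "infra/requirements.txt",
    "infra/Dockerfile",
    "spark-conf/spark-defaults.conf",
    "spark-conf/log4j.properties",
    "test_data",
    "job.py",
    "test_job.py",
    "Makefile",
]


def missing_template_files(project_root: str) -> List[str]:
    """Return the expected template paths that do not exist under project_root."""
    root = Path(project_root)
    return [path for path in EXPECTED_PATHS if not (root / path).exists()]


if __name__ == "__main__":
    missing = missing_template_files(".")
    print("Missing: " + ", ".join(missing) if missing else "Project layout looks complete.")
```

Run it from the project root; an empty result means every listed path is present.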

How to run the job

First, create a virtual environment and install the dependencies:

```shell
virtualenv .env
source .env/bin/activate
# make sure you have Python 3.7.0 or higher
make install-dev-requirements
```
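If you want your setup to fail fast on an interpreter older than the Python 3.7.0 minimum mentioned above, a small check like the one below could be run inside the activated environment. It is only a sketch; the function name check_python_version is hypothetical and the template does not ship it.

```python
import sys

# Minimum Python version stated in this guide.
MINIMUM_PYTHON = (3, 7, 0)


def check_python_version(version_info=sys.version_info, minimum=MINIMUM_PYTHON) -> bool:
    """Return True if the given interpreter version meets the minimum."""
    # Compare only (major, minor, micro) so release level and serial are ignored.
    return tuple(version_info[:3]) >= minimum


if __name__ == "__main__":
    if not check_python_version():
        sys.exit(f"Python {'.'.join(map(str, MINIMUM_PYTHON))}+ required, found {sys.version.split()[0]}")
    print("Python version OK")
```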

Also, set the SPARK_CONF_DIR environment variable to point to the spark_conf folder. Spark needs it to load the configuration files for local development:

```shell
export SPARK_CONF_DIR=./spark_conf
```
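Spark looks for spark-defaults.conf in the directory that SPARK_CONF_DIR points to. The file is plain text: each non-comment line holds a property name and a value separated by whitespace. To see which settings your local runs will pick up, a reader like the one below can help. It is a simplified sketch (Spark's own loader accepts more syntax, for example = as a separator), and the function name load_spark_defaults is hypothetical.

```python
import os
from pathlib import Path
from typing import Dict, Optional


def load_spark_defaults(conf_dir: Optional[str] = None) -> Dict[str, str]:
    """Read key/value pairs from spark-defaults.conf in conf_dir (default: $SPARK_CONF_DIR).

    Simplified: skips blank lines and '#' comments, then splits each line on
    the first run of whitespace into a property name and a value.
    """
    conf_dir = conf_dir or os.environ.get("SPARK_CONF_DIR")
    if not conf_dir:
        raise RuntimeError("SPARK_CONF_DIR is not set")
    settings: Dict[str, str] = {}
    for raw_line in (Path(conf_dir) / "spark-defaults.conf").read_text().splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        settings[parts[0]] = parts[1].strip() if len(parts) > 1 else ""
    return settings


if __name__ == "__main__":
    if "SPARK_CONF_DIR" in os.environ:
        for key, value in sorted(load_spark_defaults().items()):
            print(f"{key} = {value}")
    else:
        print("Set SPARK_CONF_DIR first, e.g. export SPARK_CONF_DIR=./spark_conf")
```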

Then, you can run the job:

```shell
python job.py
```

How to run the tests

Make sure you have installed the dependencies and exported the SPARK_CONF_DIR environment variable as described in the previous section.

To run the tests, you can use the pytest command:

```shell
pytest
```

Deployment

Build Docker Image

In the Makefile, set the docker_image and docker_tag variables to your Docker image name and tag.

For example, if you push your image to AWS ECR, your Docker image name will look like 123456789012.dkr.ecr.us-east-1.amazonaws.com/my-image.
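ECR image names follow the pattern account-id.dkr.ecr.region.amazonaws.com/repository. If you script your builds, a helper along these lines can assemble the full image reference; the part before the final colon corresponds to docker_image and the part after it to docker_tag. The function name is illustrative, and the account ID, region, repository, and the 1.0.0 tag are placeholder values.

```python
def ecr_image_reference(account_id: str, region: str, repository: str, tag: str) -> str:
    """Build an ECR image reference: <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>."""
    # AWS account IDs are always 12 digits.
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12 digits")
    # Standard commercial AWS regions use the amazonaws.com domain.
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    return f"{registry}/{repository}:{tag}"


if __name__ == "__main__":
    # Placeholder values matching the example name above.
    print(ecr_image_reference("123456789012", "us-east-1", "my-image", "1.0.0"))
```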

Then, run the following command to build the Docker image and push it to your registry:

```shell
make docker-push
```
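The exact recipe lives in the template's Makefile. The sketch below assumes it amounts to a docker build followed by a docker push, that the Dockerfile is at infra/Dockerfile (as listed in the project structure), and that the build context is the project root. It prints the two commands and only executes them when run=True; the function names are hypothetical.

```python
import subprocess
from typing import List


def docker_push_commands(docker_image: str, docker_tag: str,
                         dockerfile: str = "infra/Dockerfile",
                         context: str = ".") -> List[List[str]]:
    """Return the docker build and docker push commands for docker_image:docker_tag."""
    reference = f"{docker_image}:{docker_tag}"
    return [
        ["docker", "build", "-f", dockerfile, "-t", reference, context],
        ["docker", "push", reference],
    ]


def build_and_push(docker_image: str, docker_tag: str, run: bool = False) -> None:
    """Print each command and, if run is True, execute it."""
    for command in docker_push_commands(docker_image, docker_tag):
        print(" ".join(command))
        if run:
            # check=True raises on the first failure, so a failed build skips the push.
            subprocess.run(command, check=True)
```

Keeping the command construction separate from execution makes it easy to review what will run before touching your registry.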

Once the Docker image is built and pushed to your Docker registry, you can create a Spark Job in IOMETE.

Creating a Spark Job

There are two ways to create a Spark Job in IOMETE:

- Using the IOMETE Control Plane UI

- Using the IOMETE Control Plane API

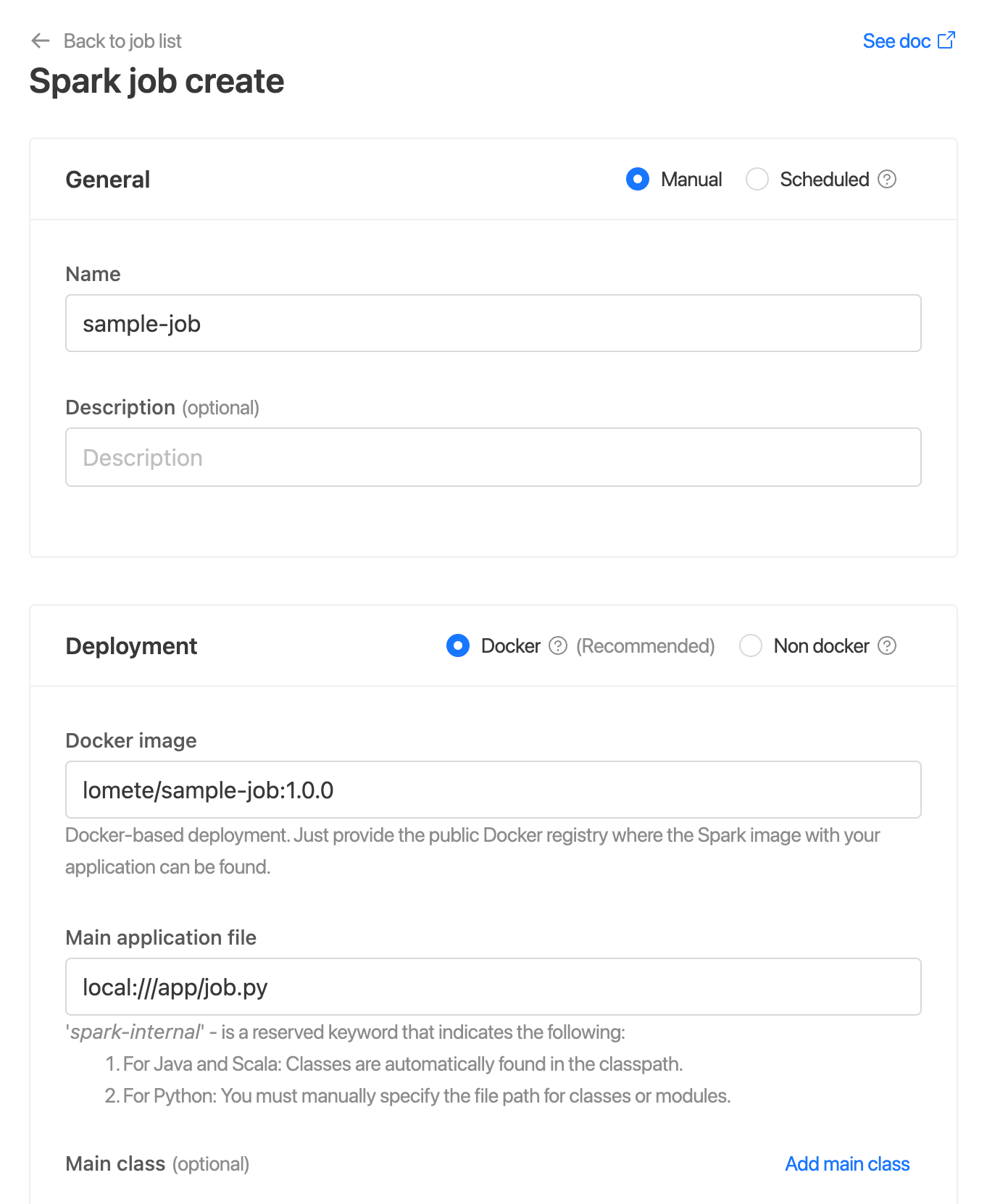

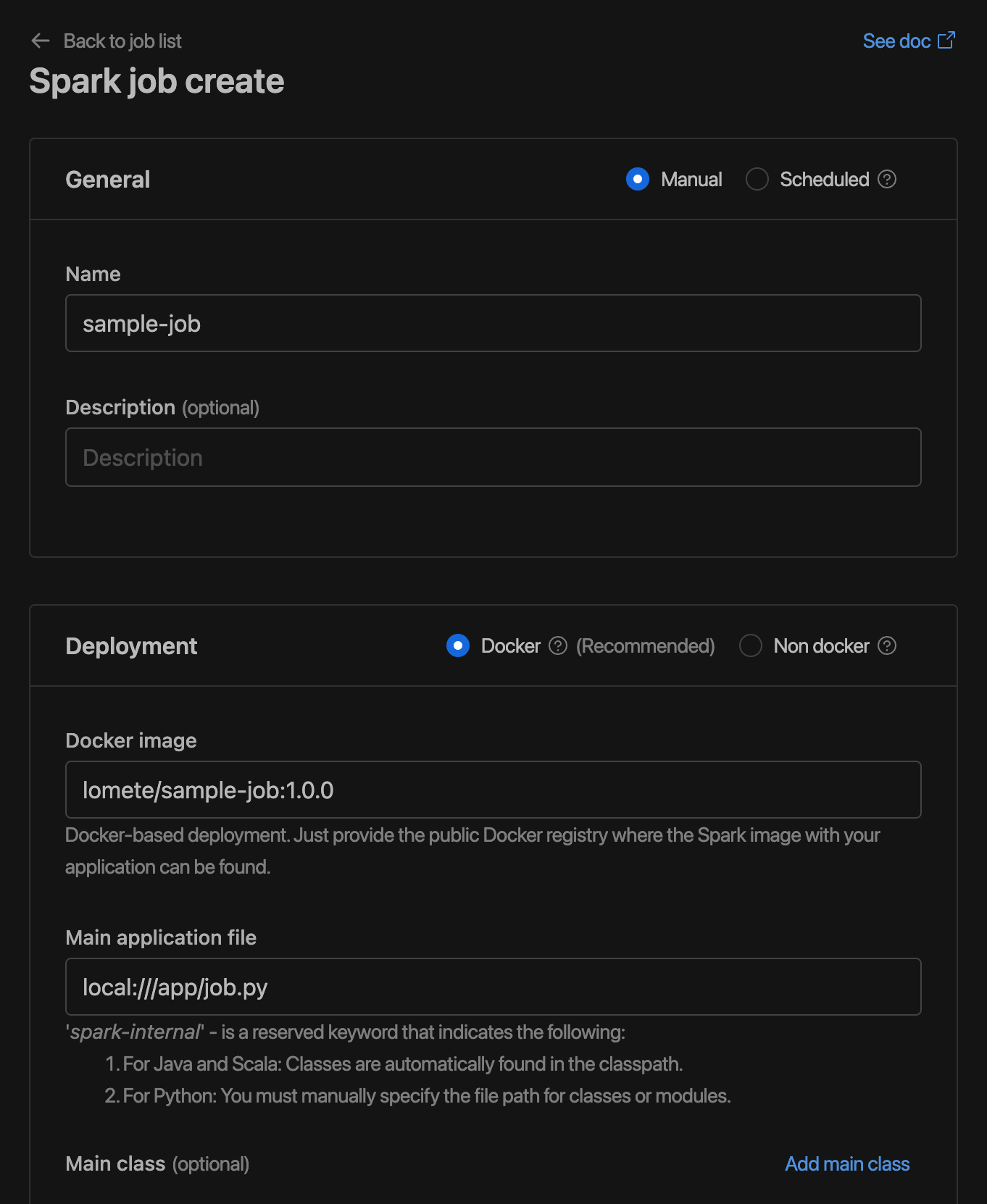

Using the IOMETE Control Plane UI

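- Go to the Spark Jobs page

- Click the button to create a new Spark Job

- Provide the following information:

  - Name: sample-job
  - Docker image: iomete/sample-job:1.0.0 (or your own image, as set by docker_image and docker_tag)
  - Main application file: local:///app/job.py (the local:// scheme tells Spark the file is already inside the Docker image)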

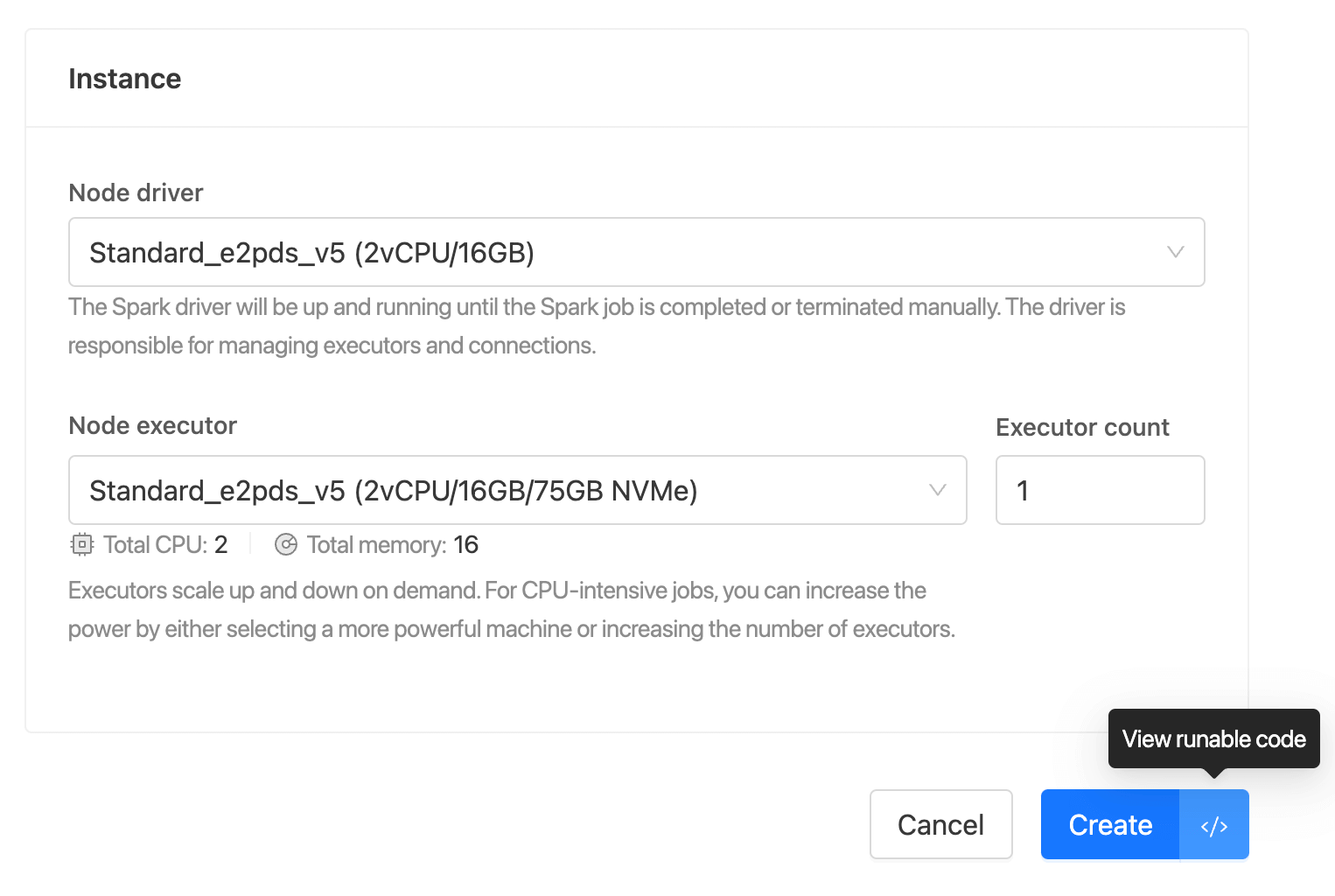

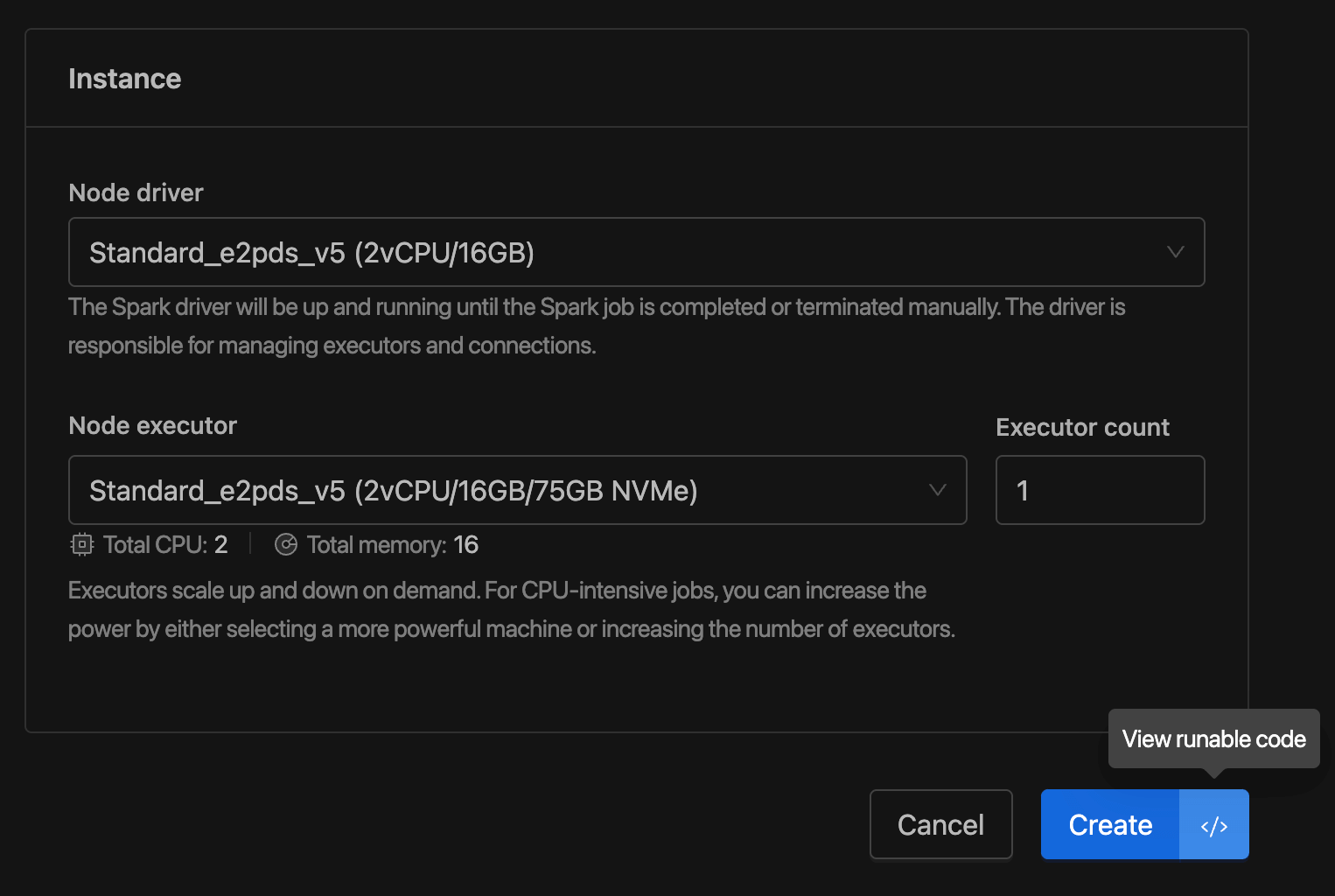

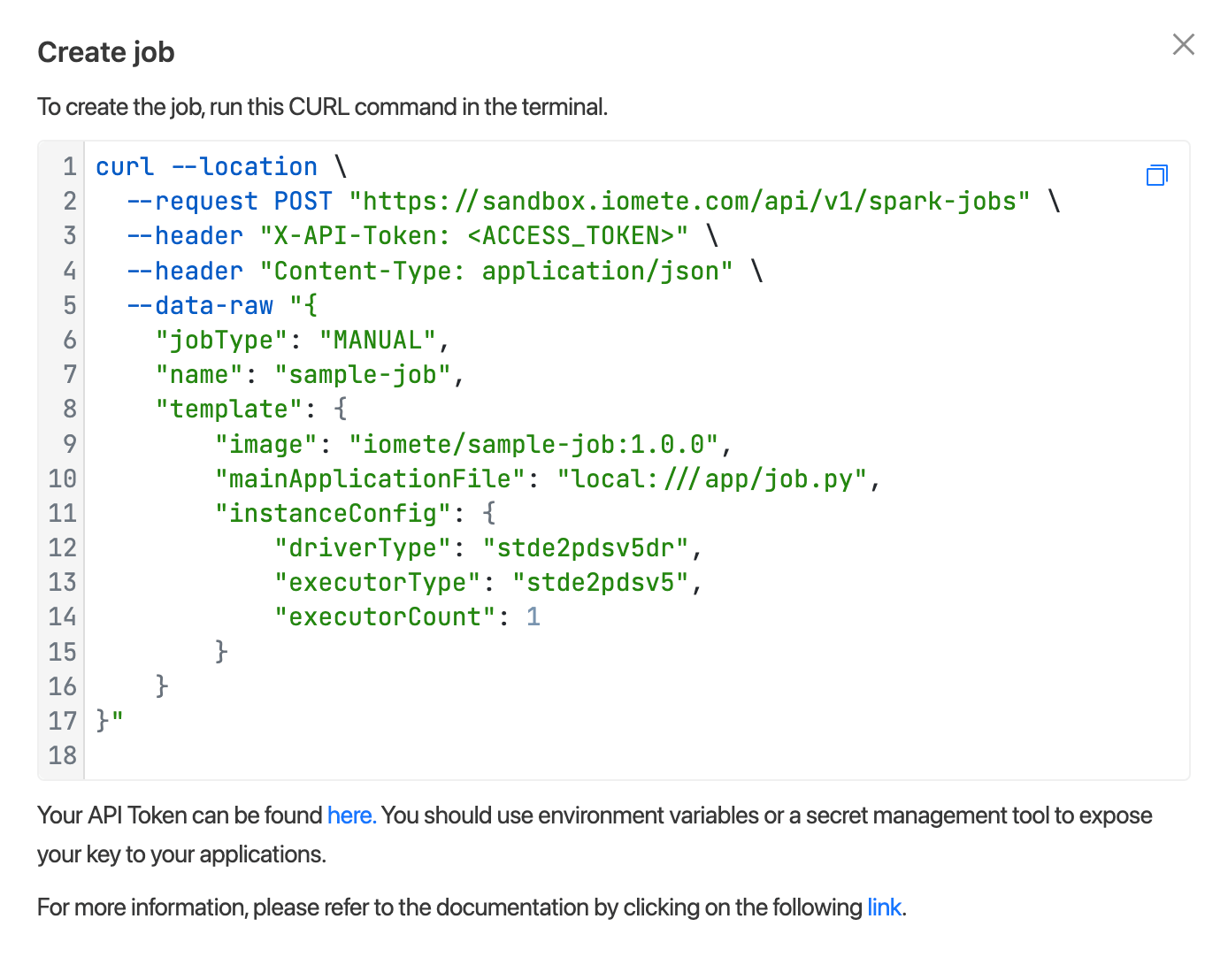

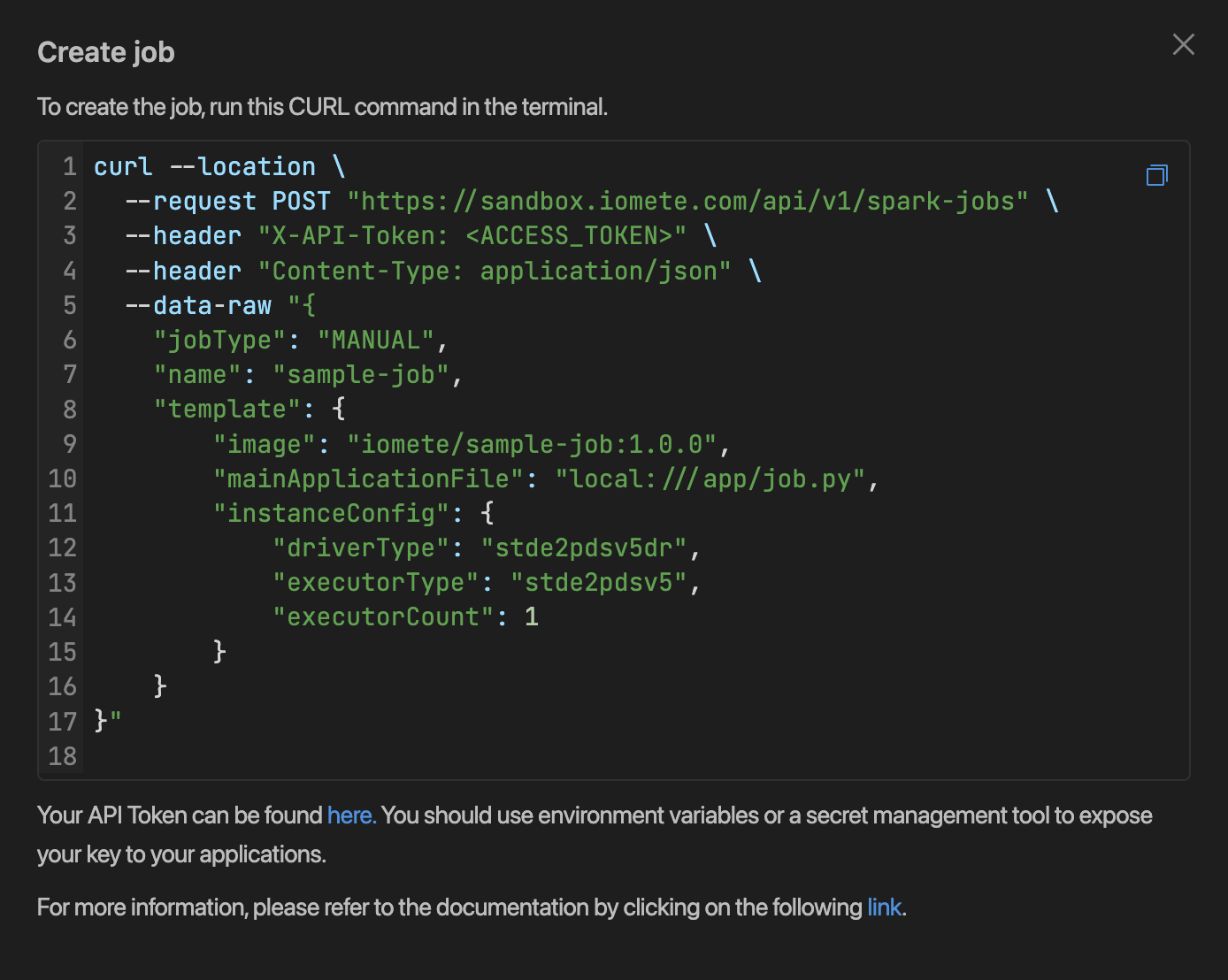

Using the IOMETE Control Plane API

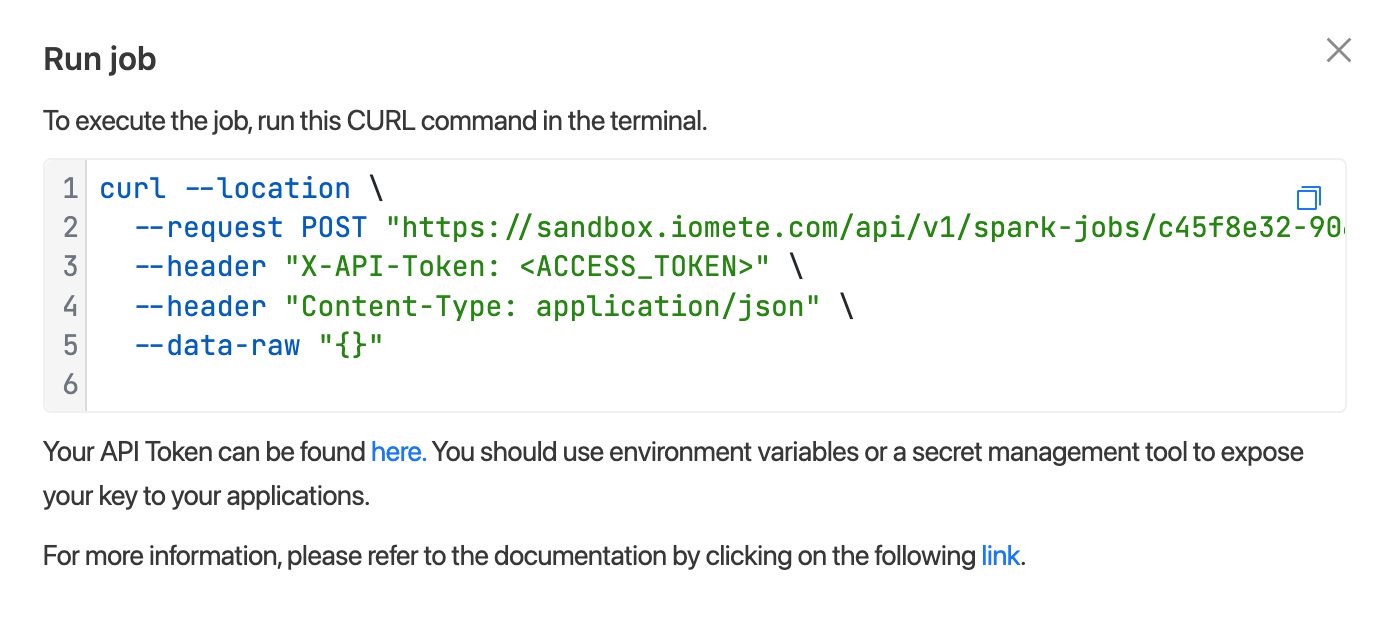

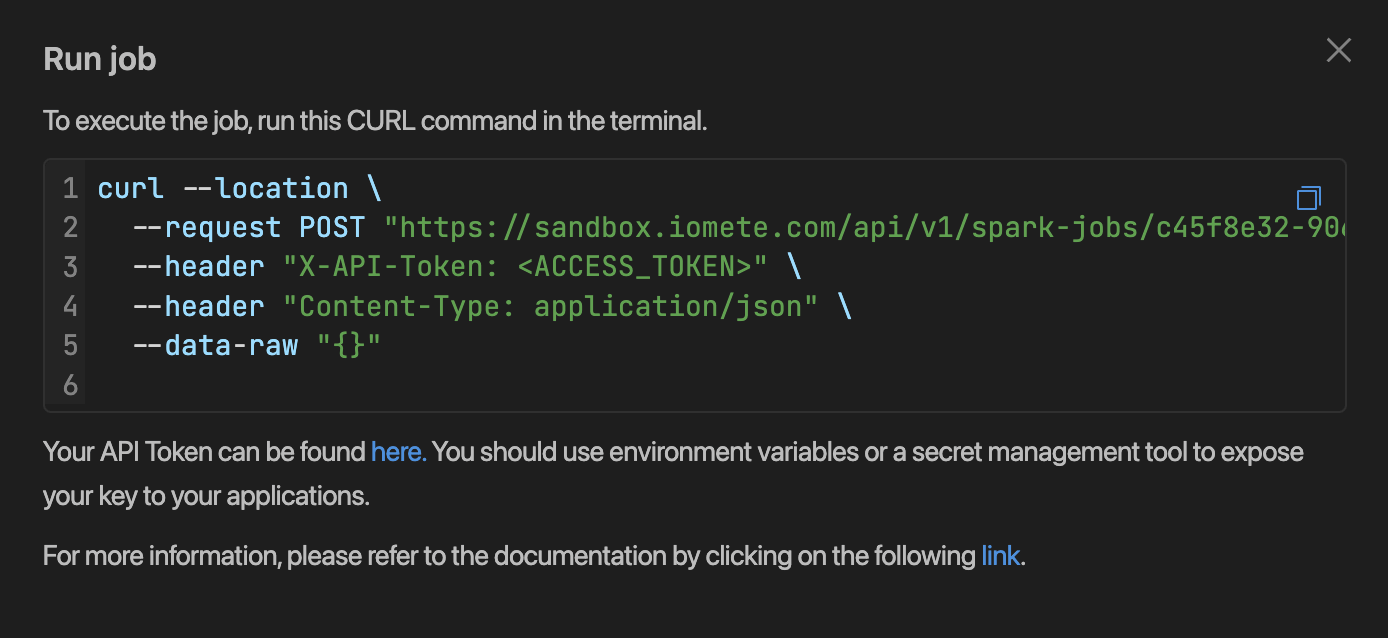

You can also create a Spark Job using the API. After entering the inputs in the UI form, scroll to the bottom and click the button that displays the API request. You will see a cURL command that you can copy and run.

To create an access token for authenticating API calls, go to the Settings menu and switch to the Access Tokens tab.
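The cURL command generated by the console is the authoritative reference for the endpoint and request body. If you prefer to call the API from Python, the sketch below shows the general shape using only the standard library: a JSON body sent with the access token in an Authorization header. The URL, the JSON field names, the Bearer header scheme, and the IOMETE_ACCESS_TOKEN environment variable are all assumptions; replace them with what your copied cURL command uses.

```python
import json
import os
import urllib.request
from typing import Any, Dict

# Hypothetical placeholder: copy the real URL from the generated cURL command.
CREATE_JOB_URL = "https://<your-iomete-host>/<path-from-curl-command>"


def build_create_job_request(url: str, token: str, payload: Dict[str, Any]) -> urllib.request.Request:
    """Prepare (but do not send) a POST request with a JSON body and an access token."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumed header scheme; use whatever the generated cURL command uses.
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


# Hypothetical field names; the values are the ones from the UI example above.
job_payload = {
    "name": "sample-job",
    "image": "iomete/sample-job:1.0.0",
    "mainApplicationFile": "local:///app/job.py",
}

if __name__ == "__main__":
    token = os.environ.get("IOMETE_ACCESS_TOKEN", "")
    request = build_create_job_request(CREATE_JOB_URL, token, job_payload)
    print(request.get_method(), request.full_url)
    # After filling in the real URL and body, send it with urllib.request.urlopen(request).
```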

Run Spark Job

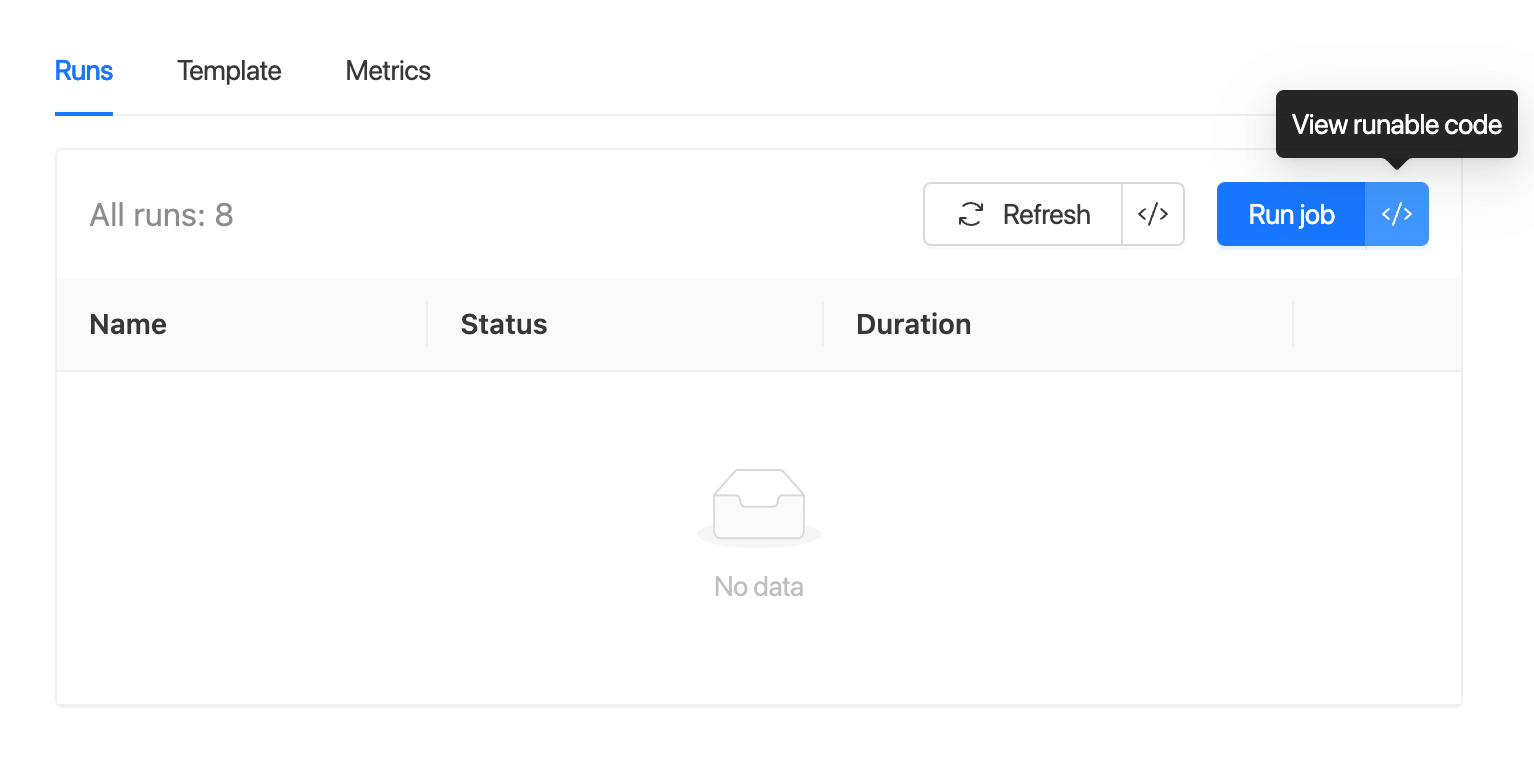

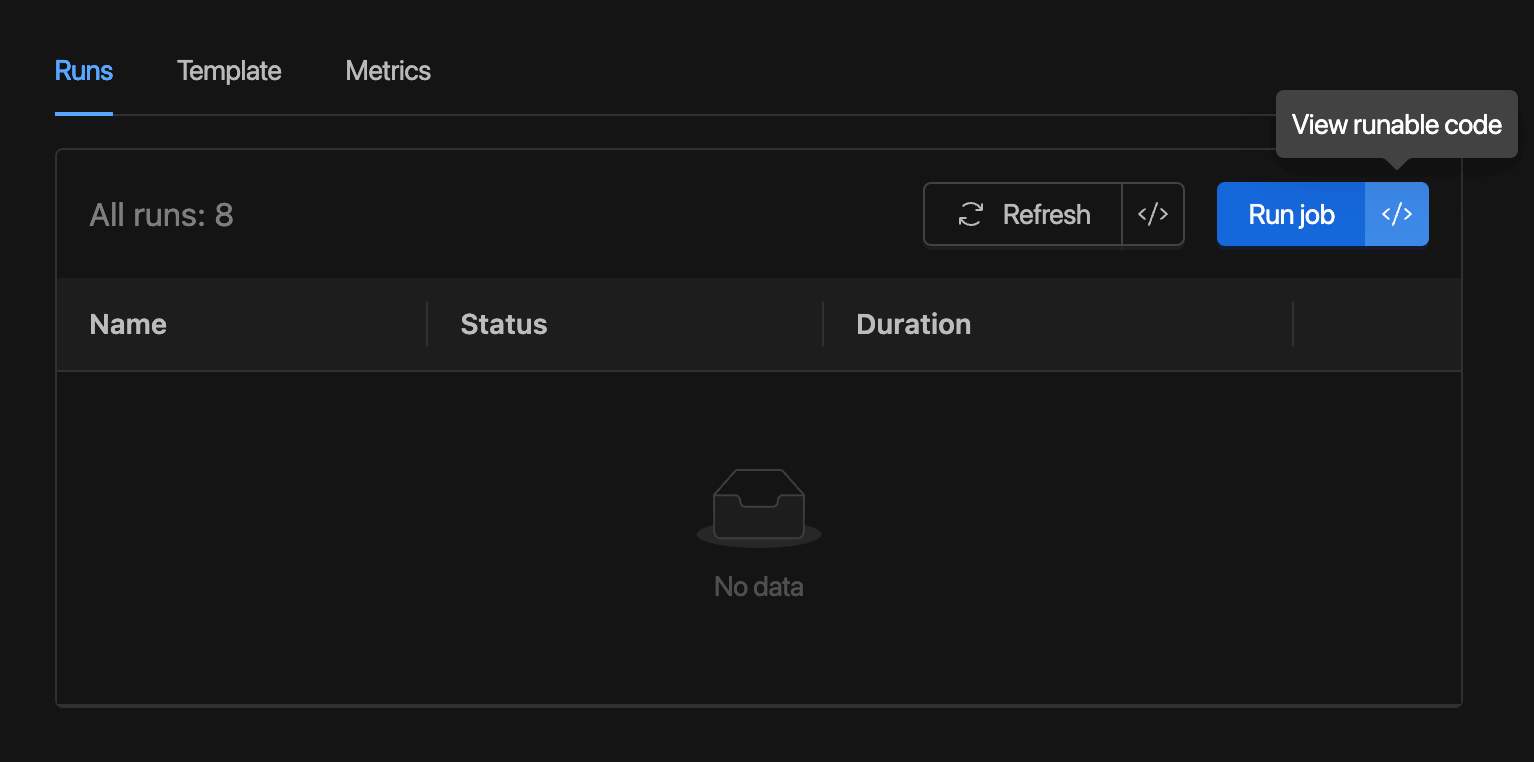

Once the Spark Job is created, you can run it using the IOMETE Control Plane UI or API.

To run it from the UI, go to the Spark Jobs page, open the job's details, and click the Run button.

To run it from the API, open the job's details and click the button that displays the equivalent cURL command, in the same way as when creating the job.

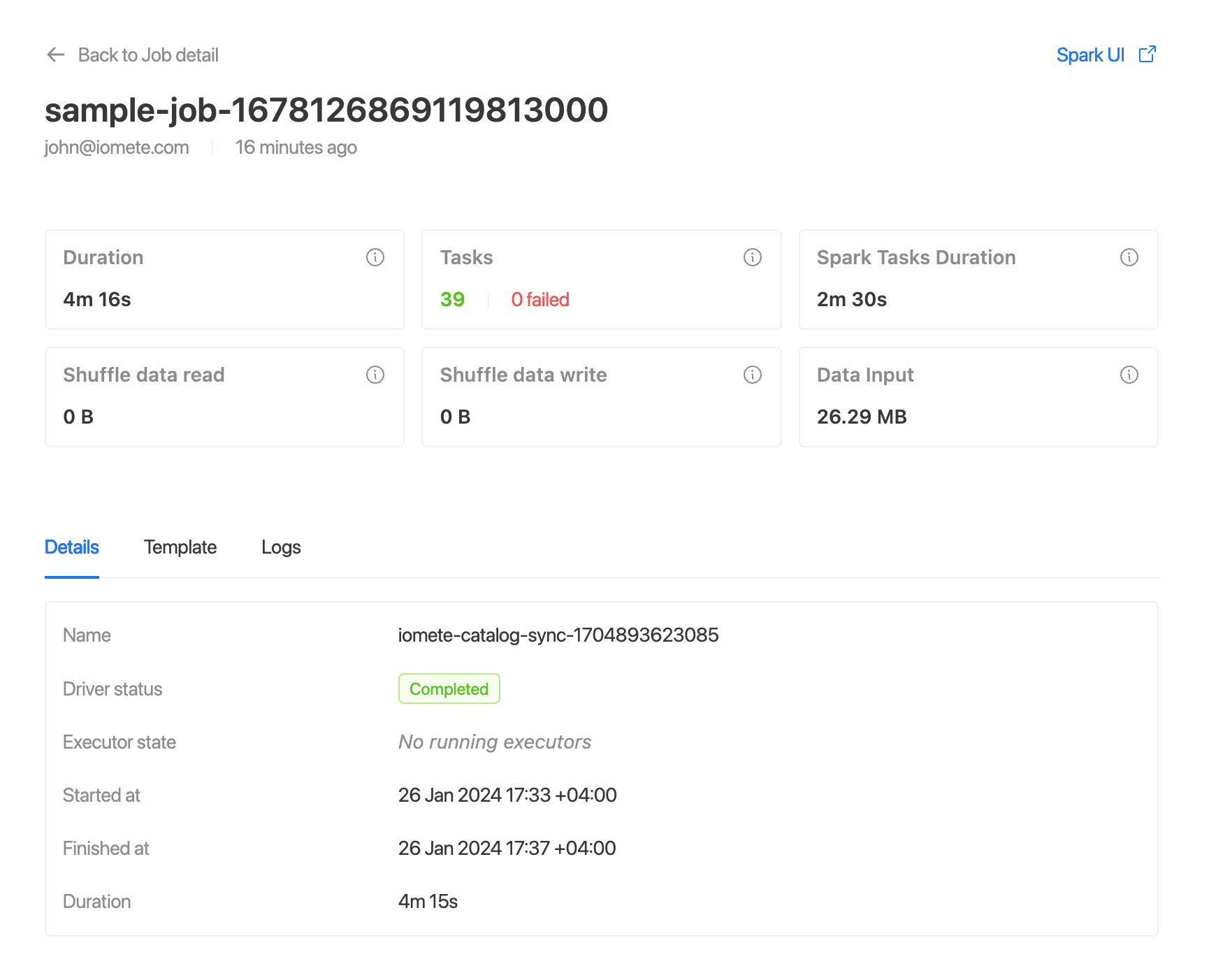

You can monitor the status of each Spark Job run on the Spark Job Runs page.
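If you start runs from a script, you may want to wait for a run to finish instead of watching the Spark Job Runs page. The sketch below polls a status function until the run reaches a terminal state or a timeout expires. The status names and the fetch_status callable are hypothetical: connect fetch_status to the IOMETE API call your generated cURL commands use, and adjust TERMINAL_STATES to the statuses the Spark Job Runs page actually shows.

```python
import time
from typing import Callable, Iterable

# Hypothetical terminal statuses; replace with the values your runs report.
TERMINAL_STATES = {"COMPLETED", "FAILED", "ABORTED"}


def wait_for_run(fetch_status: Callable[[], str],
                 poll_interval_s: float = 10.0,
                 timeout_s: float = 3600.0,
                 terminal_states: Iterable[str] = TERMINAL_STATES,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll fetch_status() until it returns a terminal state; raise TimeoutError otherwise."""
    terminal = set(terminal_states)
    deadline = time.monotonic() + timeout_s
    while True:
        status = fetch_status()
        if status in terminal:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"run still {status!r} after {timeout_s} seconds")
        sleep(poll_interval_s)
```

Passing sleep as a parameter keeps the loop easy to test without real waiting, and time.monotonic avoids surprises if the system clock changes during a long run.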

Congratulations 🎉🎉🎉