Query Scheduler Job

IOMETE provides the Query Scheduler Job to run your queries against a warehouse. You can run queries on a schedule or trigger them manually. To enable the job, follow the steps below:

Installation

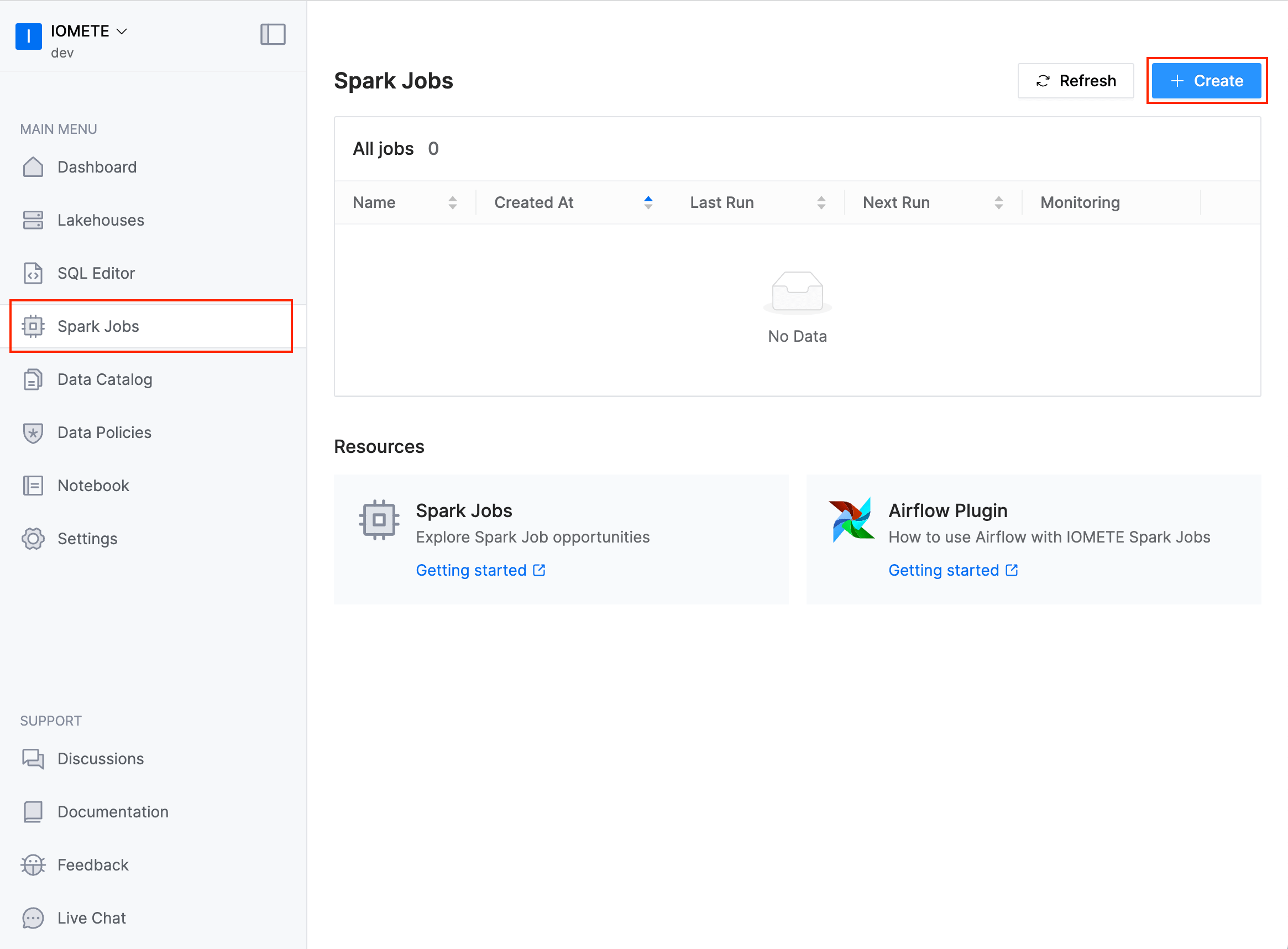

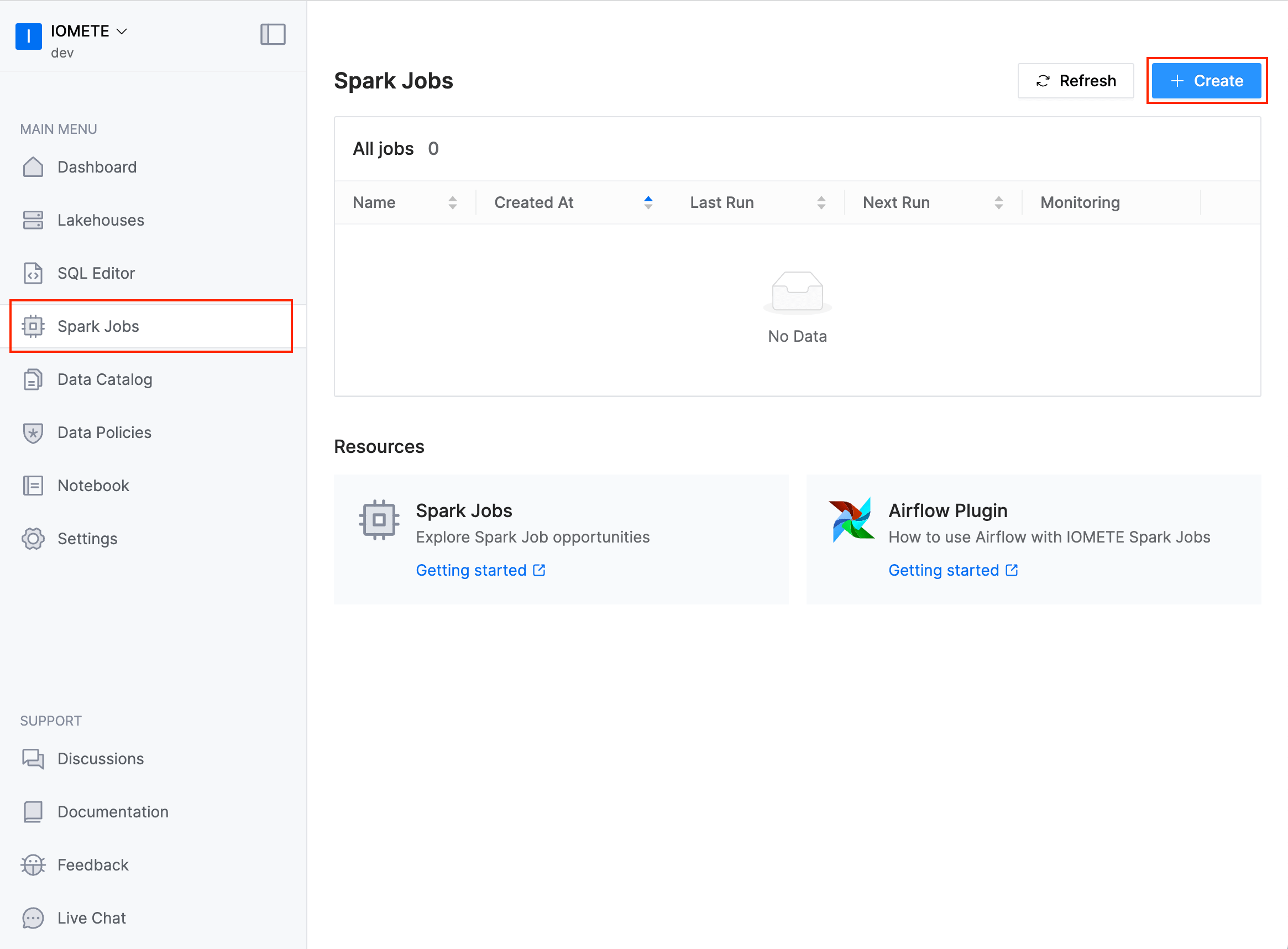

- In the left sidebar menu, choose Spark Jobs

- Click Create

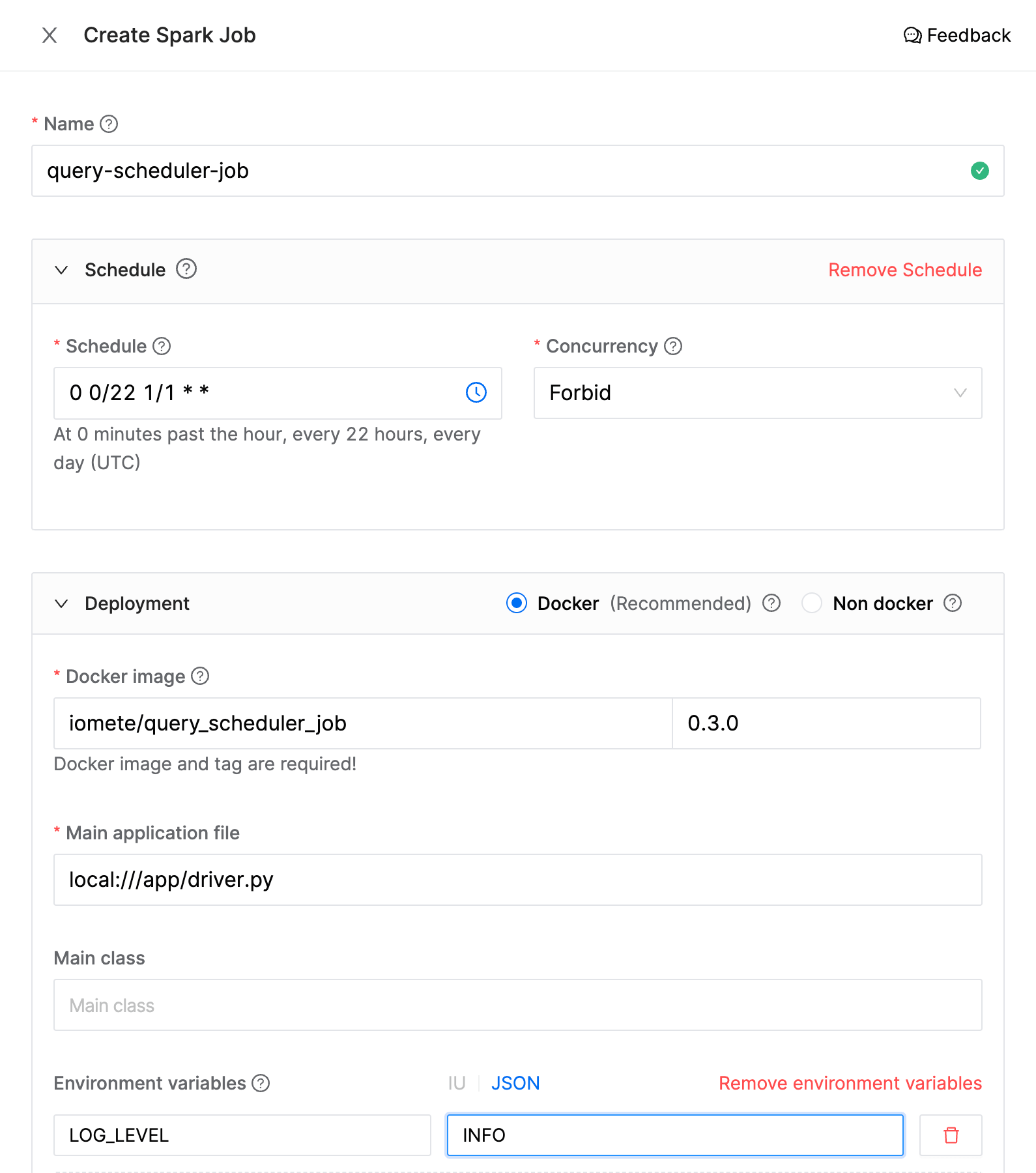

Specify the following parameters (these are examples; you can change them to suit your preferences):

- Name: query-scheduler-job

- Schedule: 0 0/22 1/1 * *

- Docker image: iomete/query_scheduler_job:0.3.0

- Main application file: local:///app/driver.py

- Environment variables: LOG_LEVEL:INFO

-

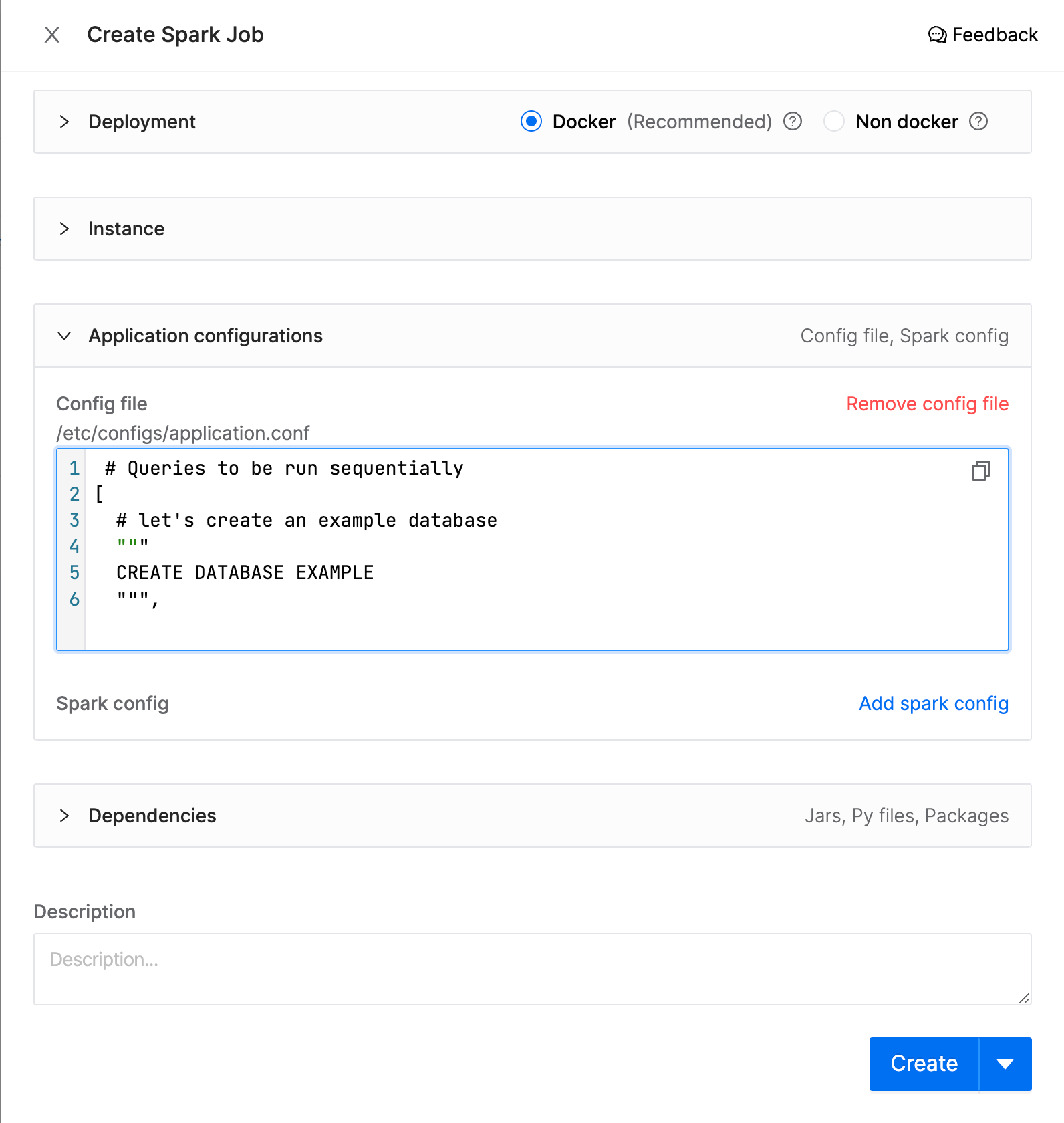

Config file: Scroll down and expand

Application configurationssection and clickAdd config file

# Queries to be run sequentially

[

# let's create an example database

"""

CREATE DATABASE IF NOT EXISTS EXAMPLE

""",

# use the newly created database to run the further queries within this database

"""

USE EXAMPLE

""",

# query example one

"""

CREATE TABLE IF NOT EXISTS dept_manager_proxy

USING org.apache.spark.sql.jdbc

OPTIONS (

url "jdbc:mysql://iomete-tutorial.cetmtjnompsh.eu-central-1.rds.amazonaws.com:3306/employees",

dbtable "employees.dept_manager",

driver 'com.mysql.cj.jdbc.Driver',

user 'tutorial_user',

password '9tVDVEKp'

)

""",

# another query that depends on the previous query result

"""

CREATE TABLE IF NOT EXISTS dept_manager AS SELECT * FROM dept_manager_proxy

"""

]

Then click the Create button.
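To make the "run sequentially" behaviour of the config file concrete, here is a minimal Python sketch of how such a file could be loaded and executed in order. It is not the job's actual source code. It assumes the config is a bracketed list of triple-quoted strings with `#` comments, which also happens to be valid Python literal syntax; the real job may parse the file differently. The names `load_queries`, `run_queries`, and `EXAMPLE_CONFIG` are hypothetical and used only for illustration.

```python
import ast
from typing import Callable, List

# Hypothetical, shortened config in the same shape as the example above.
EXAMPLE_CONFIG = '''
# Queries to be run sequentially
[
    # first query
    """
    CREATE DATABASE IF NOT EXISTS EXAMPLE
    """,
    # second query, depends on the first one
    """
    USE EXAMPLE
    """
]
'''


def load_queries(config_text: str) -> List[str]:
    """Parse the config text into a list of SQL strings.

    Assumption: the text is a list of string literals, so it can be read
    safely with ast.literal_eval (no arbitrary code is executed).
    """
    queries = ast.literal_eval(config_text)
    if not isinstance(queries, list) or not all(isinstance(q, str) for q in queries):
        raise ValueError("config must be a list of SQL strings")
    # Trim surrounding whitespace and skip entries that are empty.
    return [q.strip() for q in queries if q.strip()]


def run_queries(queries: List[str], execute: Callable[[str], object]) -> int:
    """Run each query in order and return how many were executed.

    An exception raised by `execute` propagates, so queries that depend on
    a failed query are never run.
    """
    for sql in queries:
        execute(sql)
    return len(queries)


if __name__ == "__main__":
    executed: List[str] = []
    # A list's append method stands in for a real SQL executor here.
    run_queries(load_queries(EXAMPLE_CONFIG), executed.append)
    print(executed)
```

Inside a Spark application, the `execute` argument would typically be the `sql` method of an existing `SparkSession` (for example `spark.sql`). Stopping at the first failure matters here because later queries, such as the `CREATE TABLE ... AS SELECT`, depend on tables created by earlier ones.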

Summary

You can find the source code on GitHub. Feel free to customize the code to fit your requirements. Please do not hesitate to contact us if you have any questions.

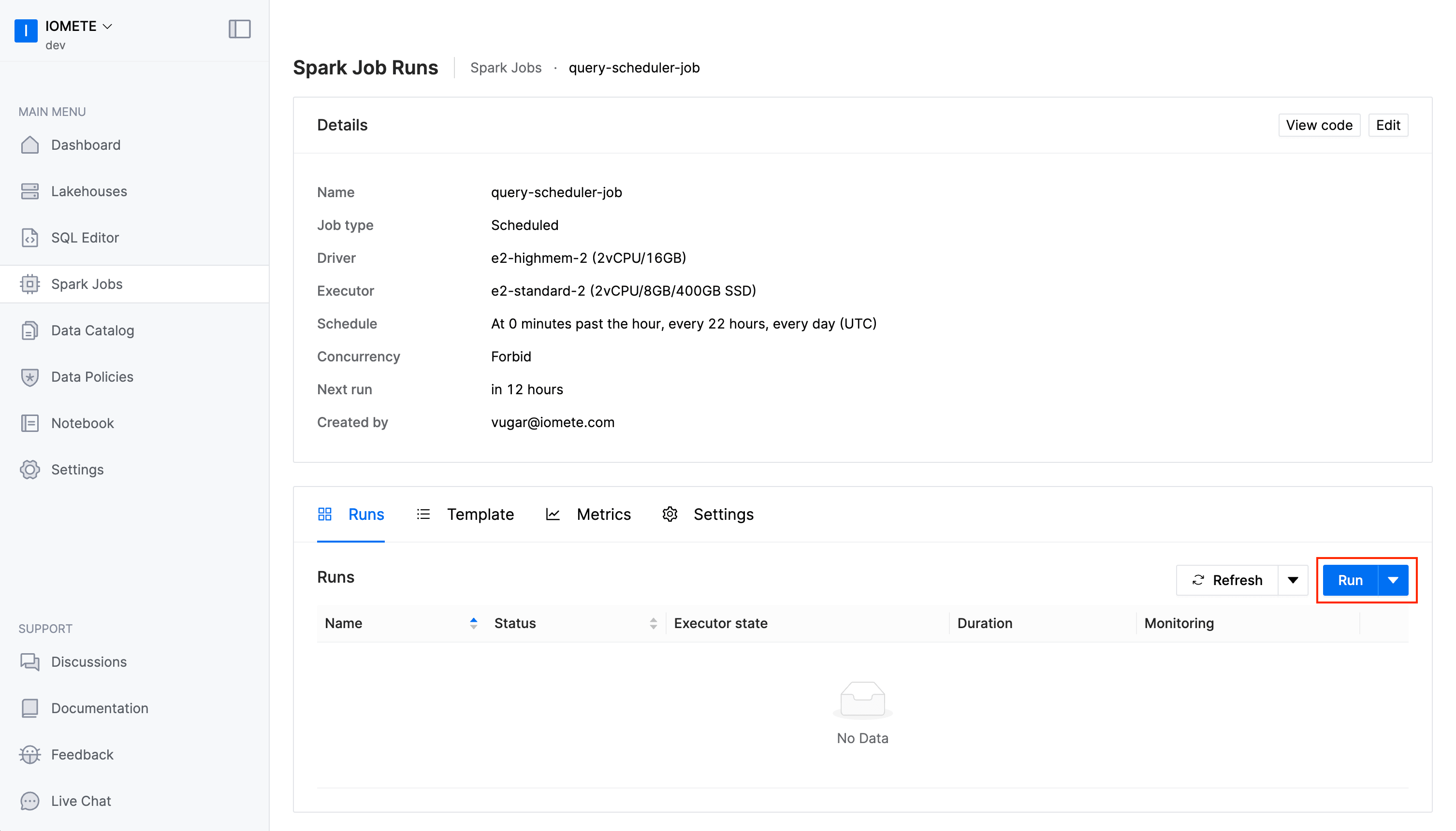

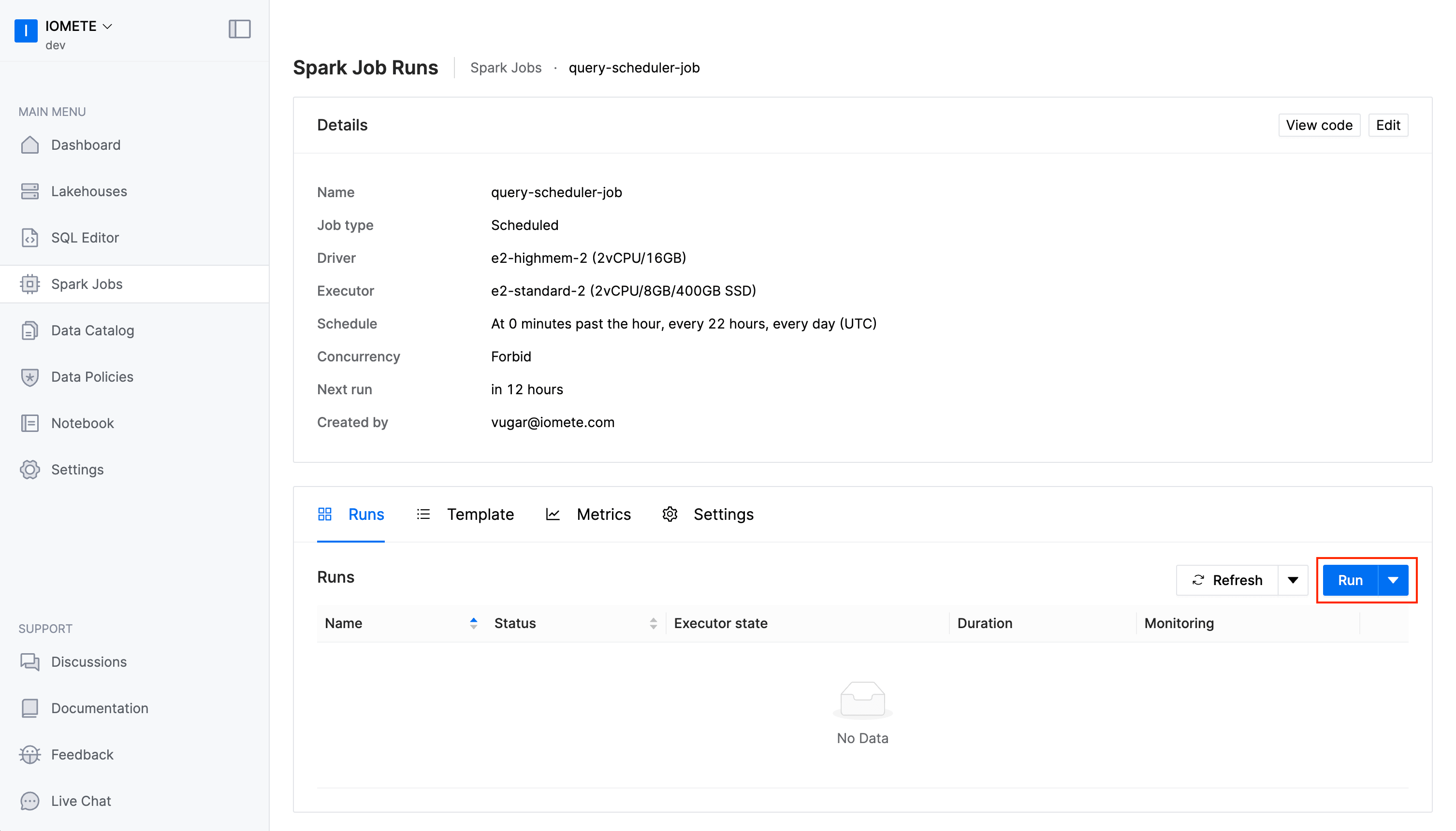

The job runs according to the defined schedule. You can also trigger it manually at any time by clicking the Run button.
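To see what the example schedule `0 0/22 1/1 * *` could mean in practice, the sketch below expands each field by hand. It assumes a five-field cron layout (minute, hour, day of month, month, day of week) and `start/step` semantics; how the platform's scheduler actually interprets the expression is not stated here, so treat the result as illustrative. The helper `expand_field` is hypothetical and supports only `*`, a single number, and `start/step`.

```python
from typing import List


def expand_field(field: str, lowest: int, highest: int) -> List[int]:
    """Expand one cron field into the list of values it matches.

    Supported forms (a deliberately small subset of cron syntax):
    '*', a single number such as '0', and 'start/step' or '*/step'.
    """
    if field == "*":
        return list(range(lowest, highest + 1))
    if "/" in field:
        start_text, step_text = field.split("/", 1)
        start = lowest if start_text == "*" else int(start_text)
        step = int(step_text)
        if step <= 0:
            raise ValueError("step must be a positive integer")
        return list(range(start, highest + 1, step))
    return [int(field)]


# Assumed field order: minute, hour, day of month, month, day of week.
schedule = "0 0/22 1/1 * *"
minute, hour, day_of_month, month, day_of_week = schedule.split()

print(expand_field(minute, 0, 59))             # [0]
print(expand_field(hour, 0, 23))               # [0, 22]
print(len(expand_field(day_of_month, 1, 31)))  # 31
```

Under these assumptions, the example schedule fires at 00:00 and 22:00 on every day of every month.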