File Streaming

Continuously transfer files to Iceberg.

File formats

Tested file formats.

- CSV

Job creation

- In the left sidebar menu, choose Spark Jobs.

- Click Create.

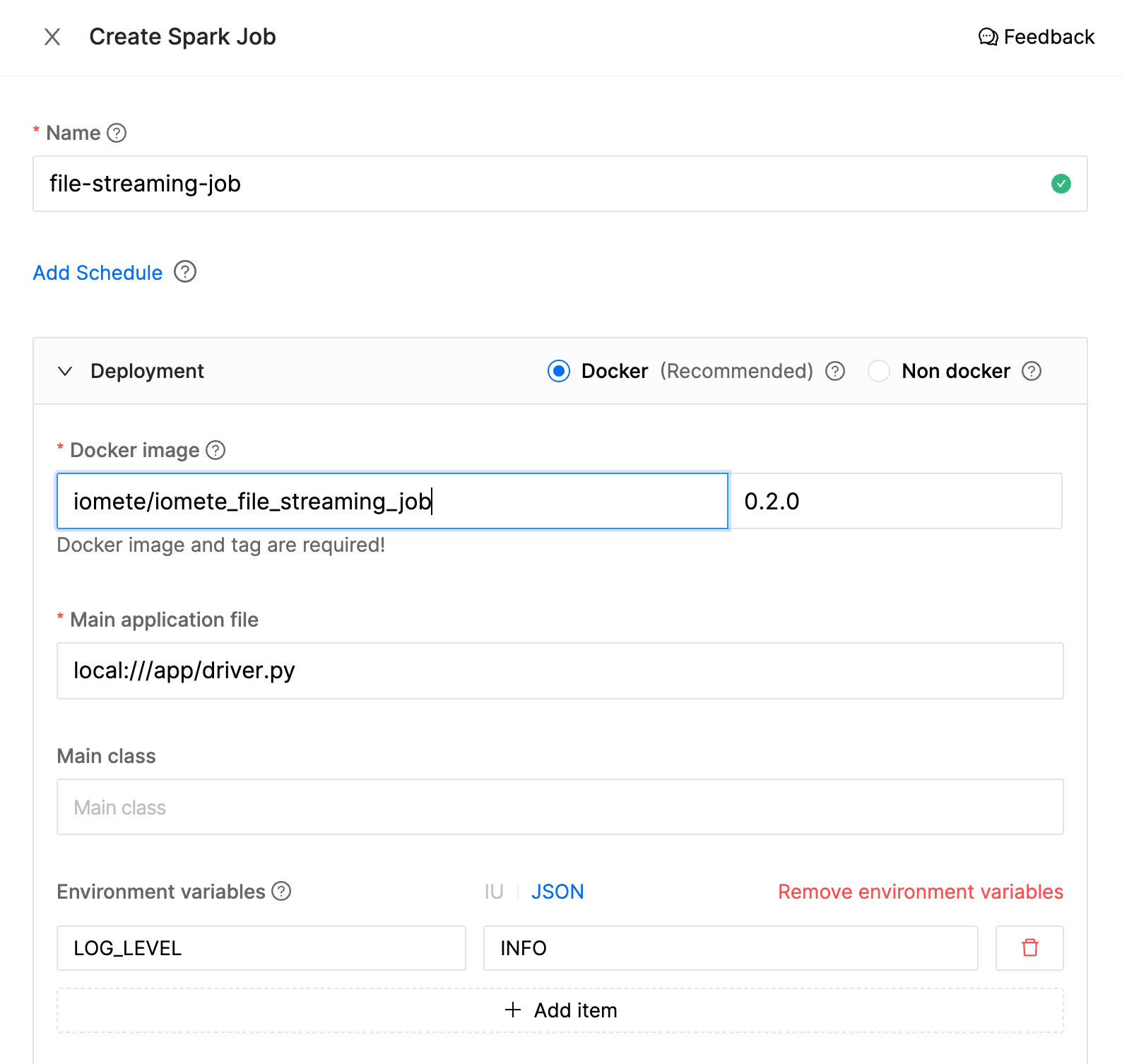

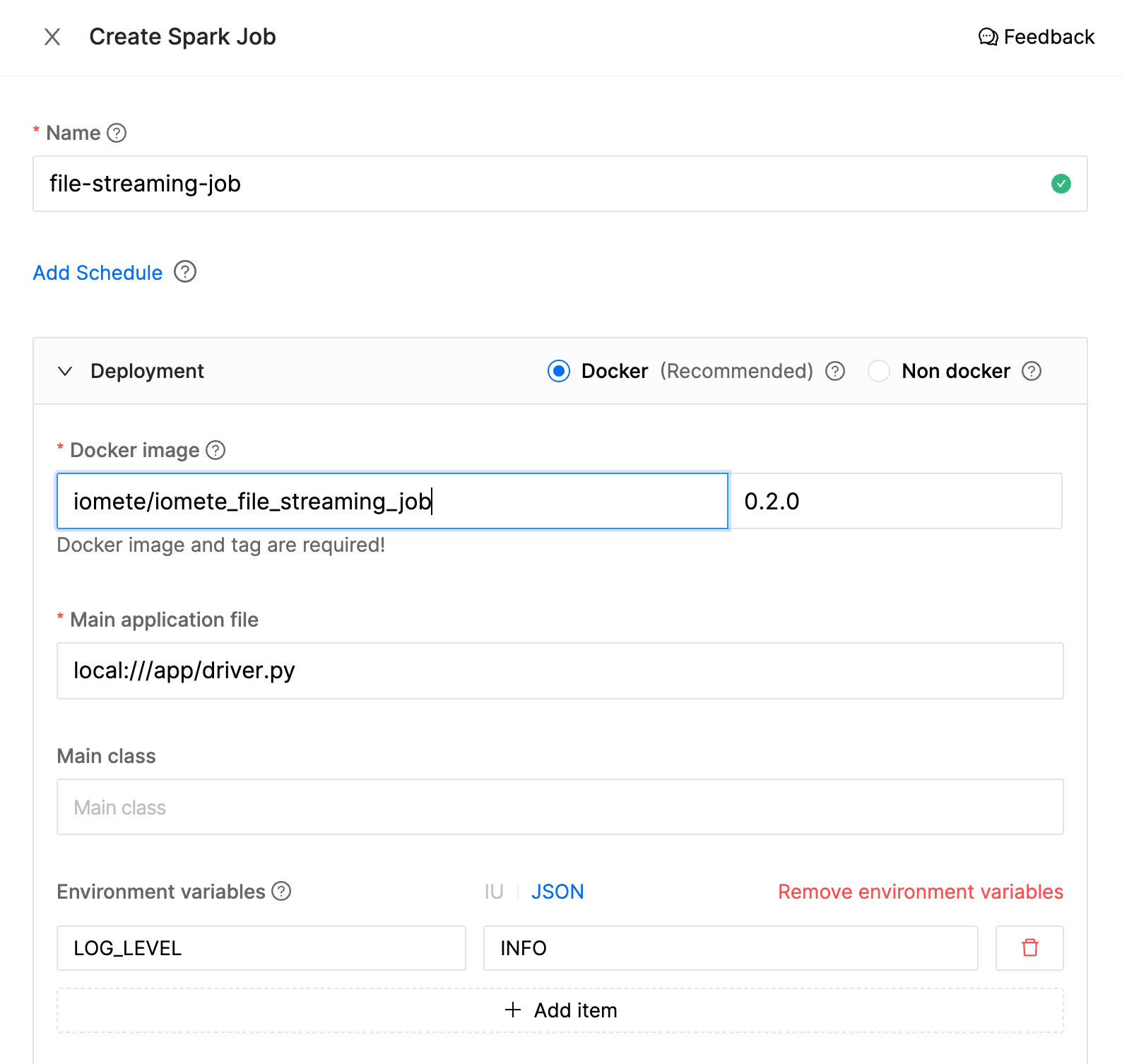

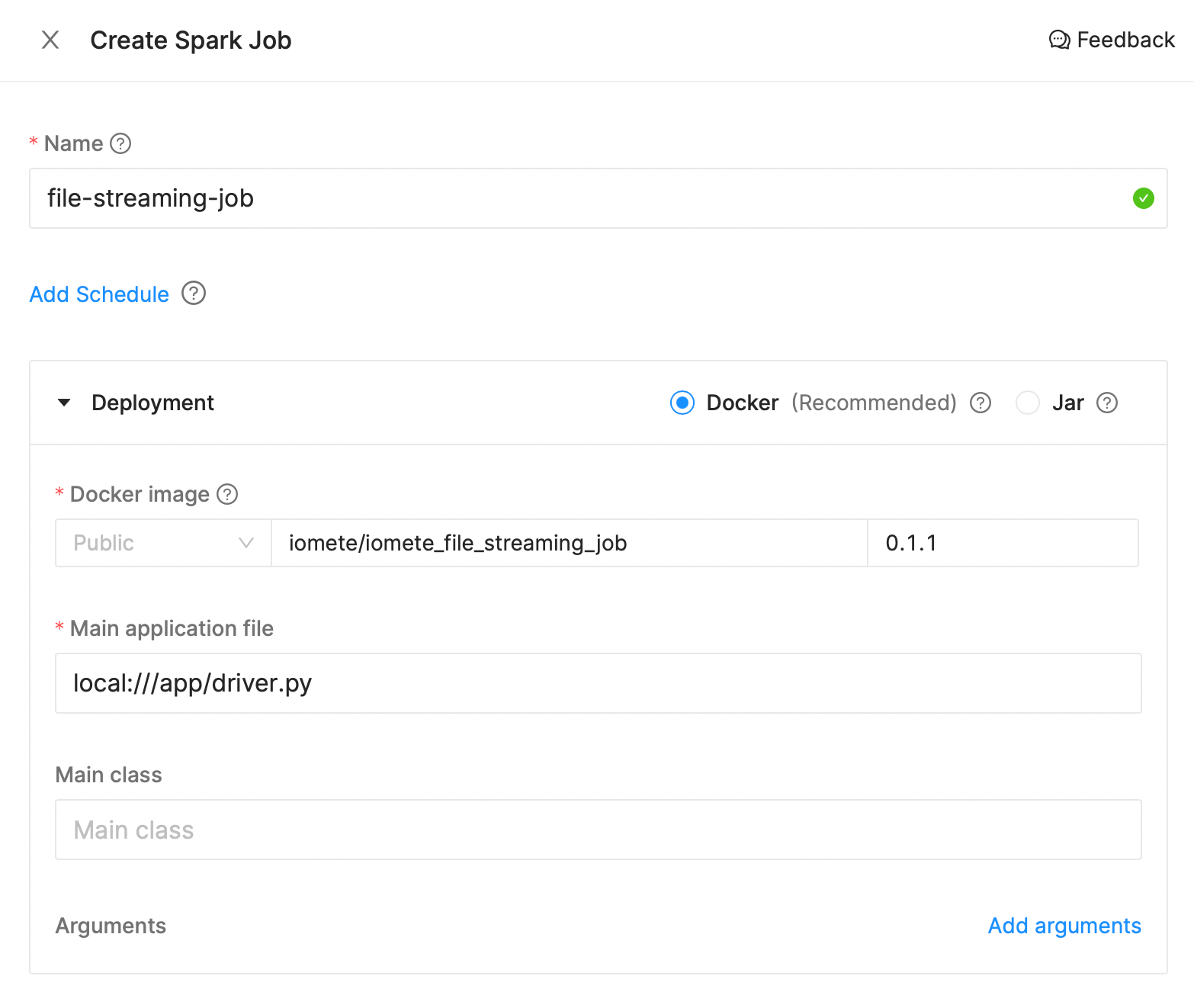

Specify the following parameters (these are examples, you can change them based on your preference):

- Name: file-streaming-job

- Docker image: iomete/iomete_file_streaming_job:0.2.0

- Main application file: local:///app/driver.py

- Environment variables: LOG_LEVEL: INFO or ERROR

You can use environment variables to store sensitive values such as passwords and secrets. You can then reference these variables in your config file using the ${DB_PASSWORD} syntax.
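As a rough illustration of the ${DB_PASSWORD} syntax, the sketch below resolves such placeholders in a config string from environment variables using Python's standard `string.Template`. The helper name `resolve_env_placeholders` and the `password` key are hypothetical; how the job itself performs the substitution is not shown here and may differ.

```python
import os
from string import Template


def resolve_env_placeholders(config_text: str, env=None) -> str:
    """Replace ${NAME} placeholders with values from the environment.

    Hypothetical helper for illustration only; it is not part of the job.
    Raises KeyError if a referenced variable is not set.
    """
    values = os.environ if env is None else env
    return Template(config_text).substitute(values)


if __name__ == "__main__":
    # Assumed example: DB_PASSWORD would normally be defined in the job's
    # Environment variables section, never hard-coded like this.
    sample = 'password: "${DB_PASSWORD}"'
    print(resolve_env_placeholders(sample, {"DB_PASSWORD": "s3cret"}))
```

Failing loudly on a missing variable is a deliberate choice in this sketch: a silently empty password is usually harder to debug than an early error.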

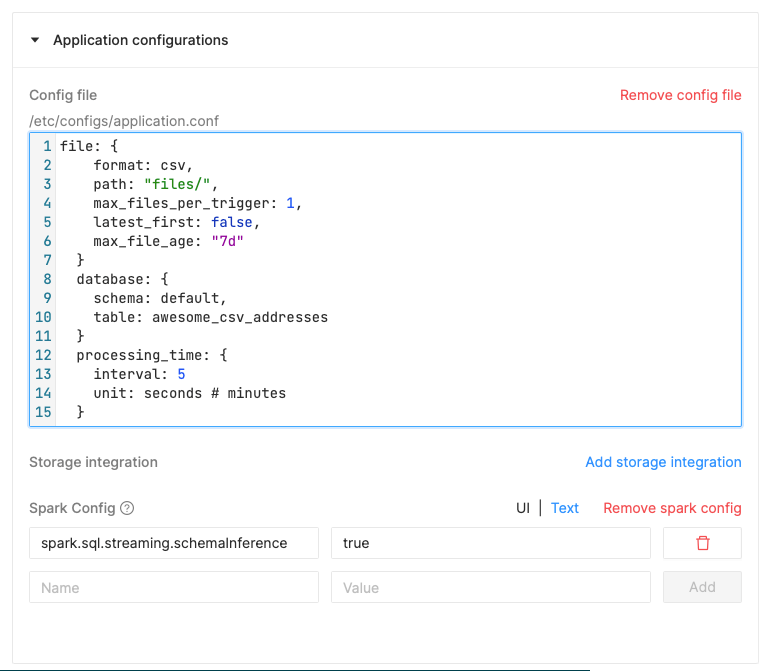

Config file

Scroll down, expand the Application configurations section, click Add config file, and paste the following configuration.

    {
      file: {
        format: csv,
        path: "files/",
        max_files_per_trigger: 1,
        latest_first: false,
        max_file_age: "7d"
      }
      database: {
        schema: default,
        table: awesome_csv_addresses
      }
      processing_time: {
        interval: 5
        unit: seconds # or minutes
      }
    }

Configuration properties

| Property | Description |
|---|---|
| file | Required properties for connecting to and configuring the file source. |
| database | Destination database properties. |
| processing_time | Interval at which incoming data is persisted to Iceberg. |
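To make the properties above more concrete, here is a minimal sketch of how they might translate into Spark Structured Streaming settings. The option names `maxFilesPerTrigger`, `latestFirst`, and `maxFileAge` are standard Spark file-source options, but the mapping itself is an assumption: the job's internals are not documented here, and the helper names (`file_source_options`, `trigger_interval`, `target_table`) are hypothetical.

```python
# Example config mirroring the config file above, written as a Python dict.
config = {
    "file": {
        "format": "csv",
        "path": "files/",
        "max_files_per_trigger": 1,
        "latest_first": False,
        "max_file_age": "7d",
    },
    "database": {"schema": "default", "table": "awesome_csv_addresses"},
    "processing_time": {"interval": 5, "unit": "seconds"},
}


def file_source_options(file_cfg: dict) -> dict:
    """Translate the `file` section into Spark file-source option names."""
    return {
        "maxFilesPerTrigger": str(file_cfg["max_files_per_trigger"]),
        "latestFirst": str(file_cfg["latest_first"]).lower(),
        "maxFileAge": file_cfg["max_file_age"],
    }


def trigger_interval(processing_time: dict) -> str:
    """Build a processing-time interval string such as '5 seconds'."""
    return f"{processing_time['interval']} {processing_time['unit']}"


def target_table(database: dict) -> str:
    """Build the destination table identifier as schema.table."""
    return f"{database['schema']}.{database['table']}"


if __name__ == "__main__":
    print(file_source_options(config["file"]))
    print(trigger_interval(config["processing_time"]))
    print(target_table(config["database"]))
    # With PySpark available, these values could be wired up roughly as
    # (assumed usage, not the job's actual code):
    #   stream = (spark.readStream.format(config["file"]["format"])
    #             .options(**file_source_options(config["file"]))
    #             .schema(schema)  # streaming file sources need a schema
    #             .load(config["file"]["path"]))
    #   (stream.writeStream
    #          .trigger(processingTime=trigger_interval(config["processing_time"]))
    #          .toTable(target_table(config["database"])))
```

Read this way, `processing_time` controls how often a micro-batch is written, while `max_files_per_trigger` caps how many new files each micro-batch picks up.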

Create Spark Job - Deployment

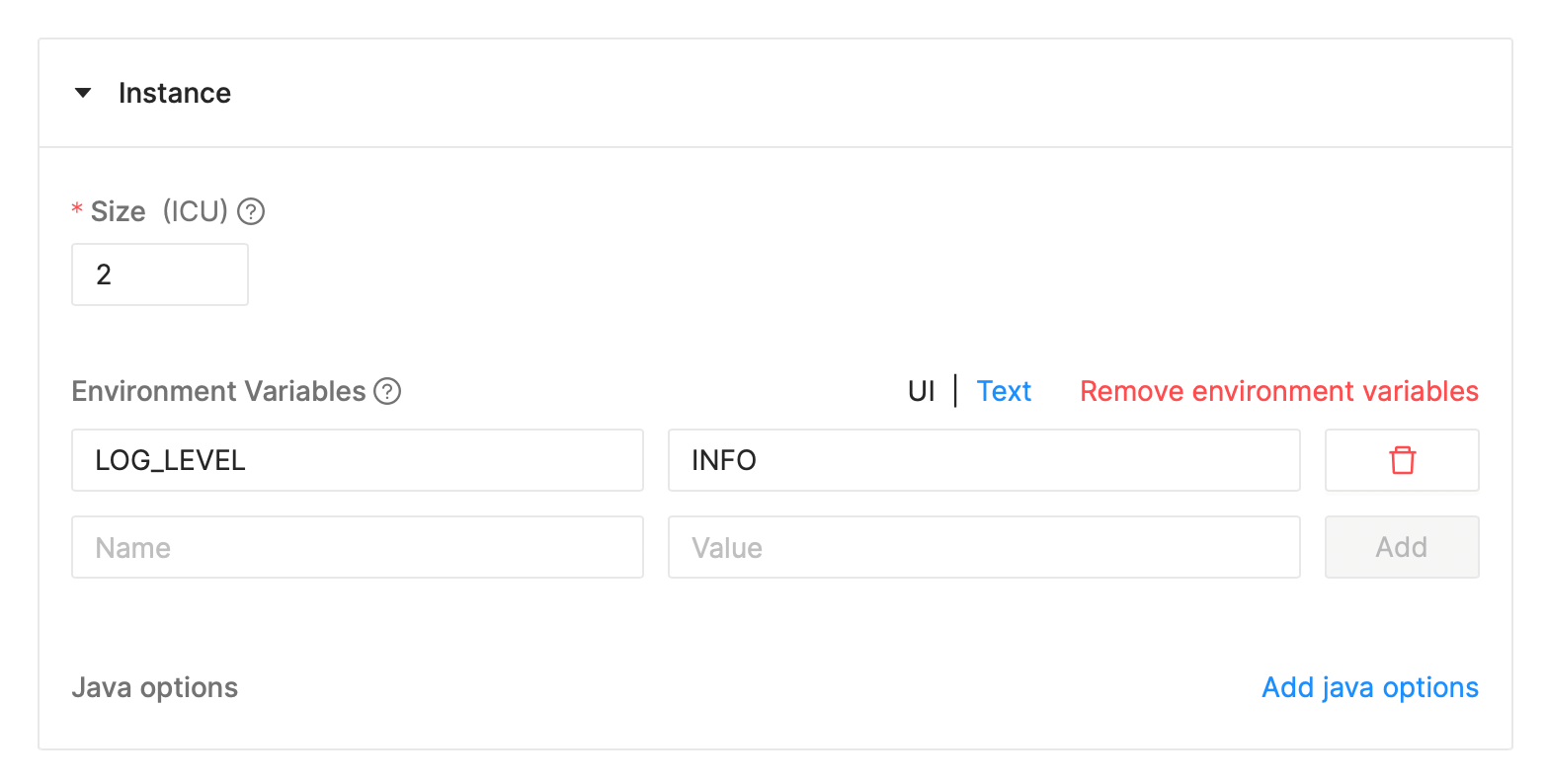

Create Spark Job - Instance

You can use environment variables to store sensitive data such as passwords and secrets. You can then reference these variables in your config file using the ${ENV_NAME} syntax.

Create Spark Job - Application Config

Tests

Prepare the dev environment

    virtualenv .env  # or: python3 -m venv .env
    source .env/bin/activate
    pip install -e ."[dev]"

Run the tests

    python3 -m pytest  # or just: pytest