Data Catalog Sync Job

The Data Catalog Sync Job scans all catalogs in your IOMETE environment and indexes their tables. Once indexed, tables appear in the Data Catalog page where you can:

- Add documentation and descriptions

- Assign owners

- Define classifications

- Configure maintenance properties

Latest version: 5.0.0 (requires IOMETE 3.16.x or later)

Installation

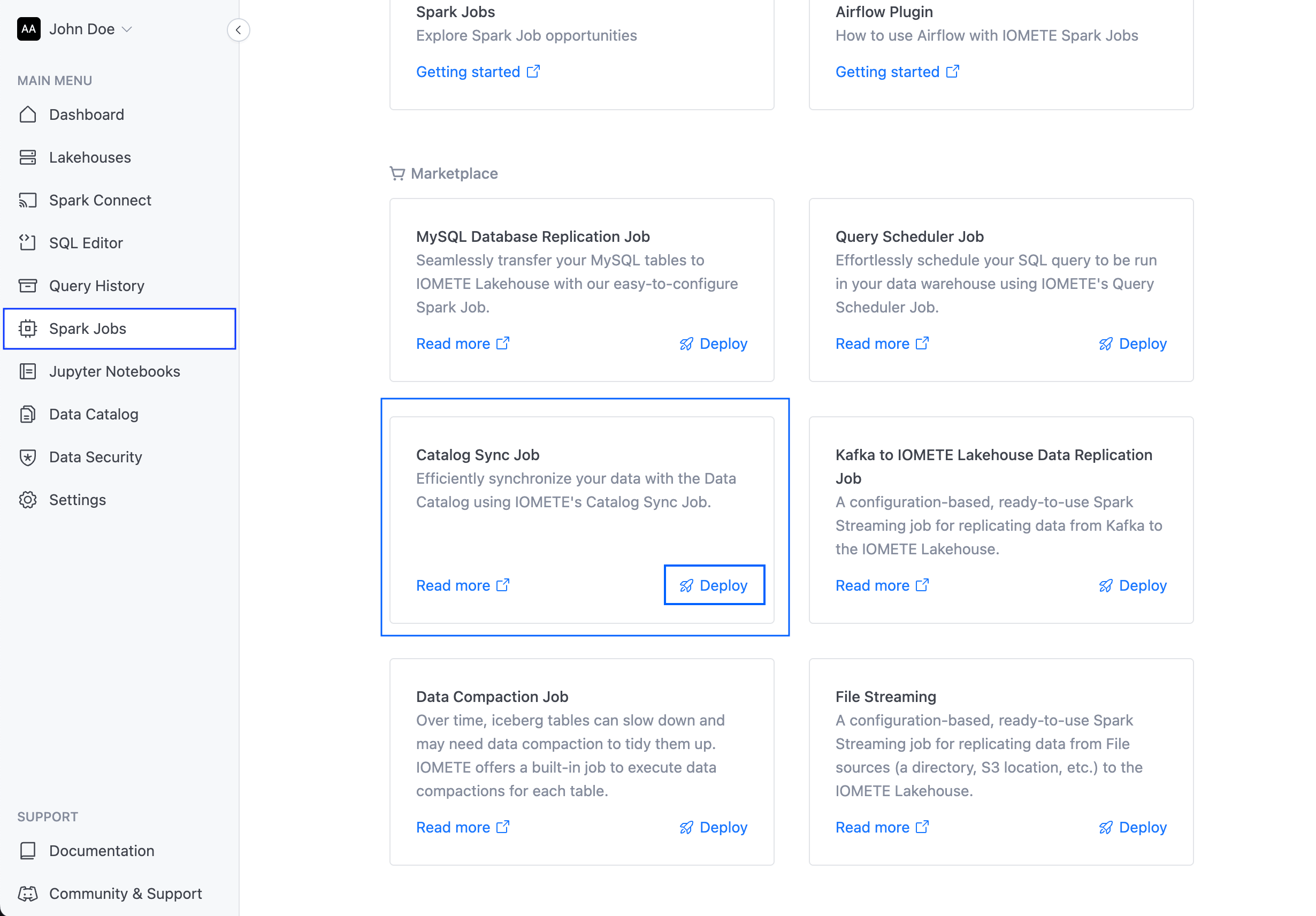

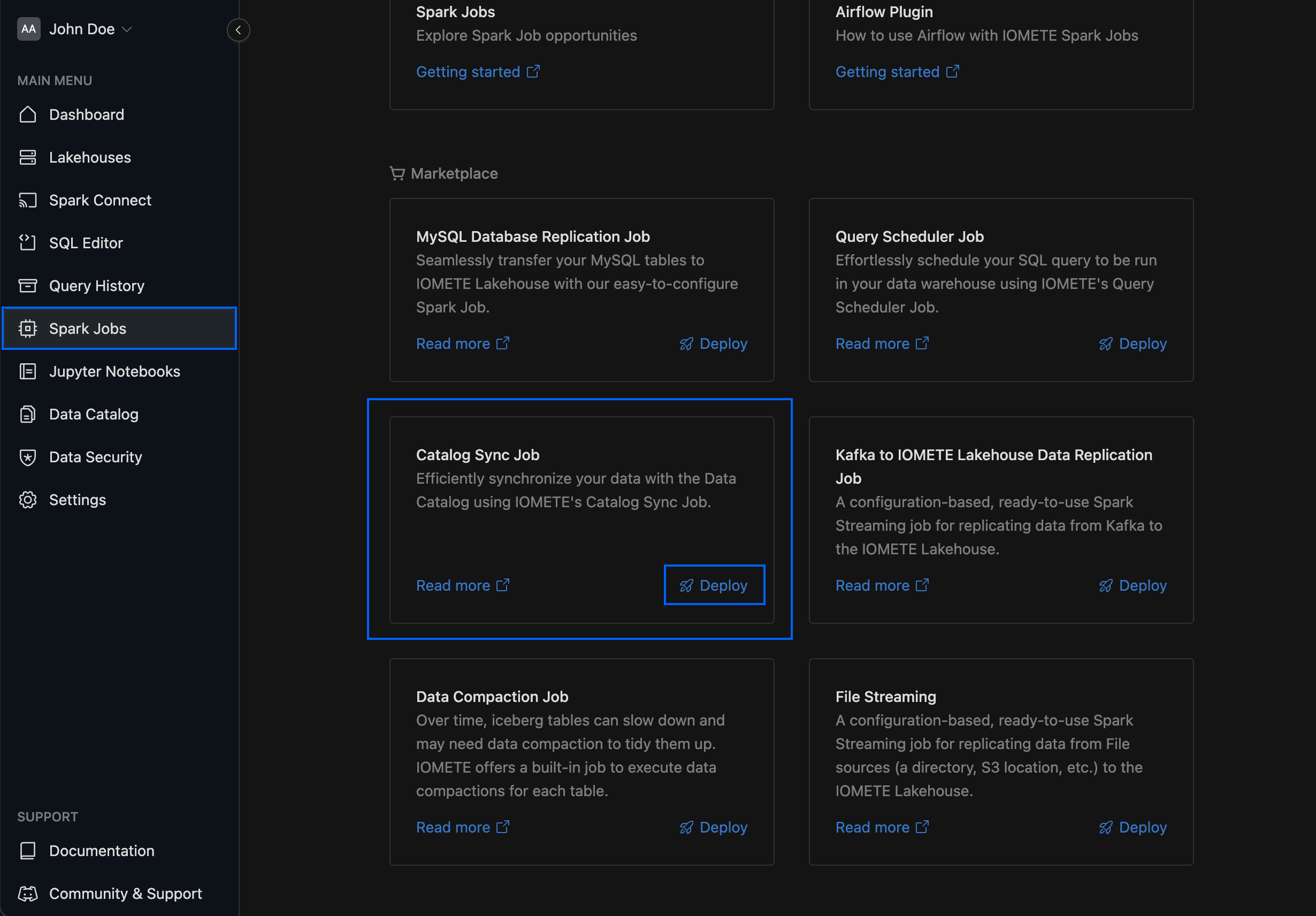

From the Marketplace

Search for Data Catalog Sync in the Spark Job Marketplace and click Deploy.

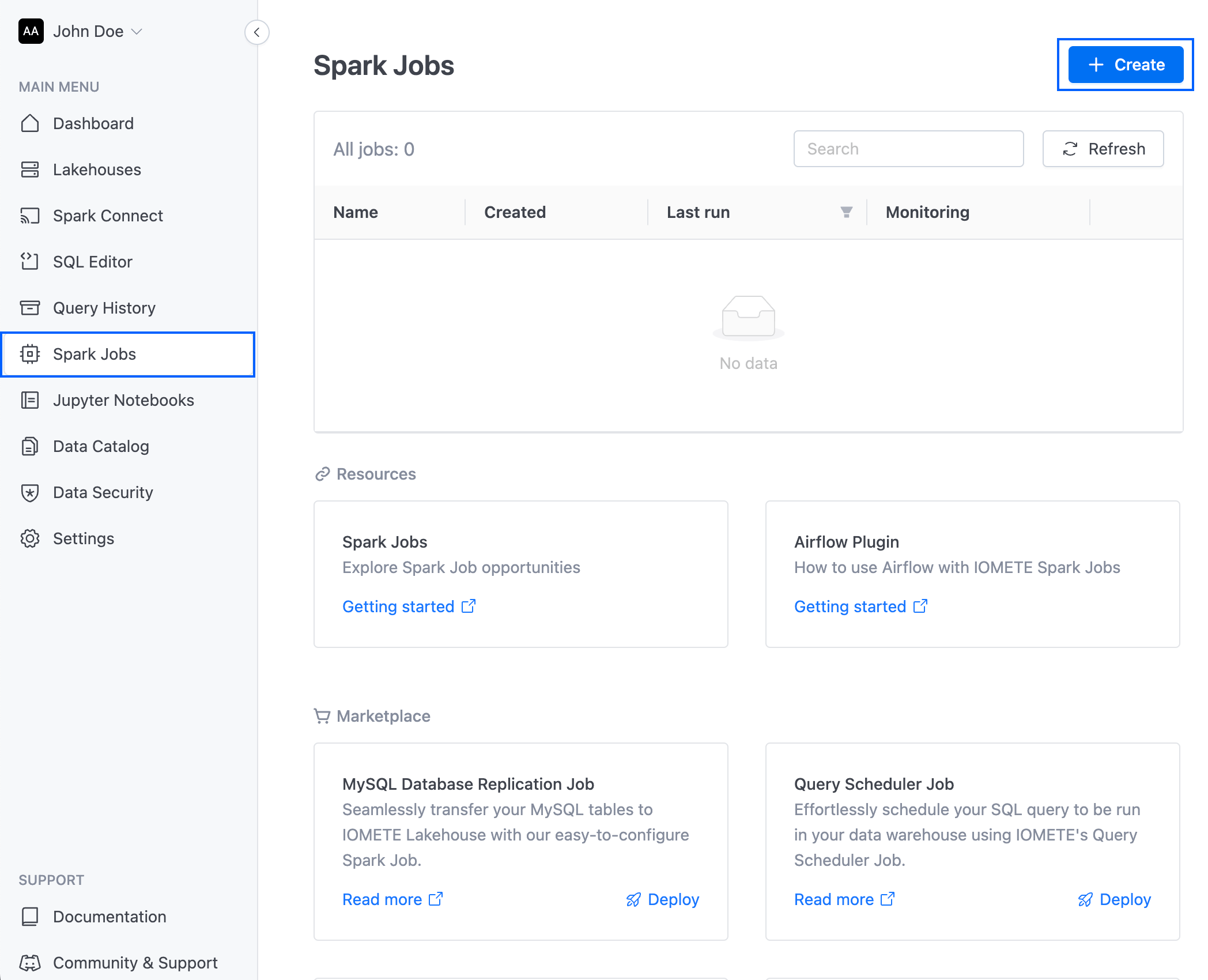

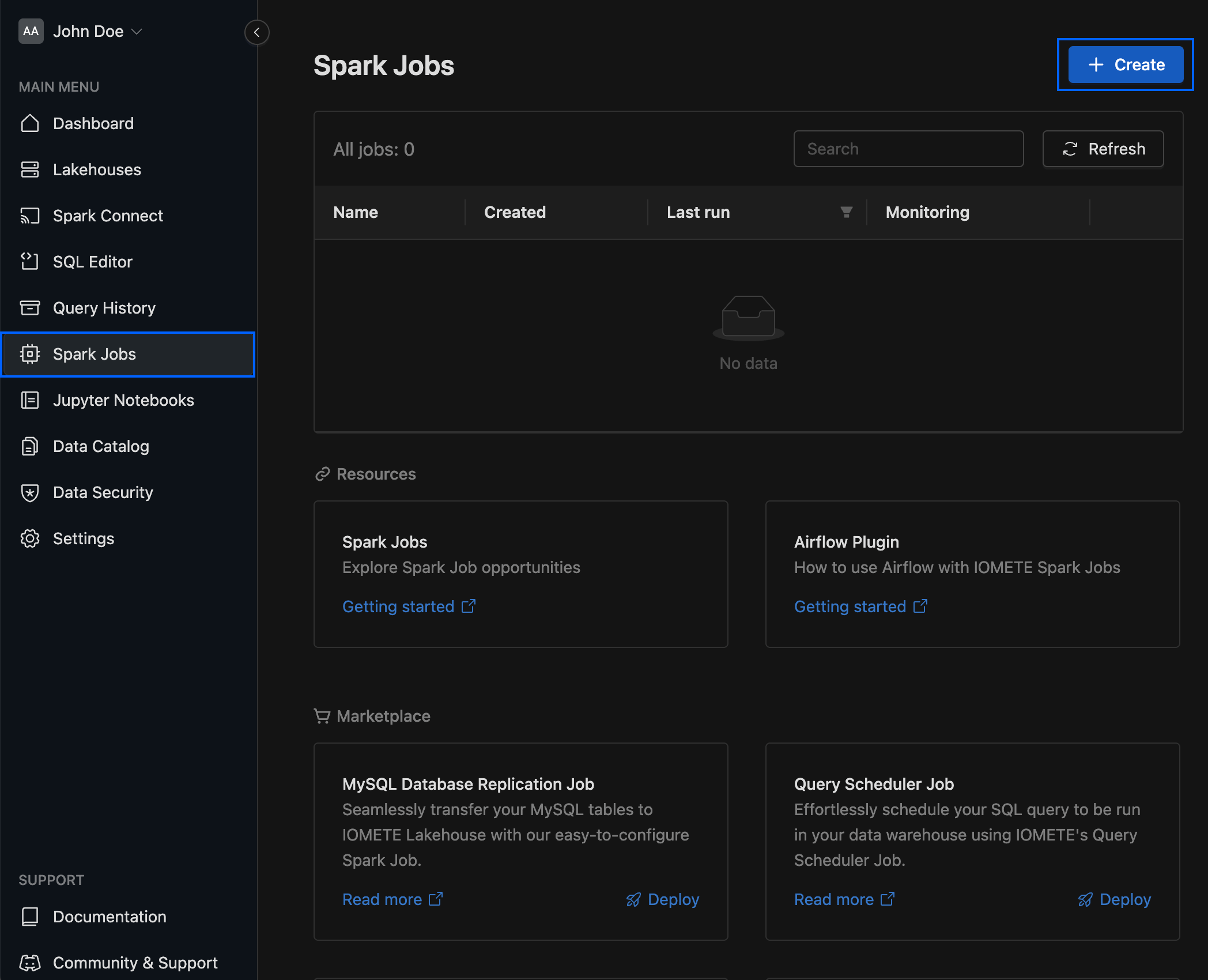

Manual Setup

- Go to Spark Jobs in the sidebar

- Click Create

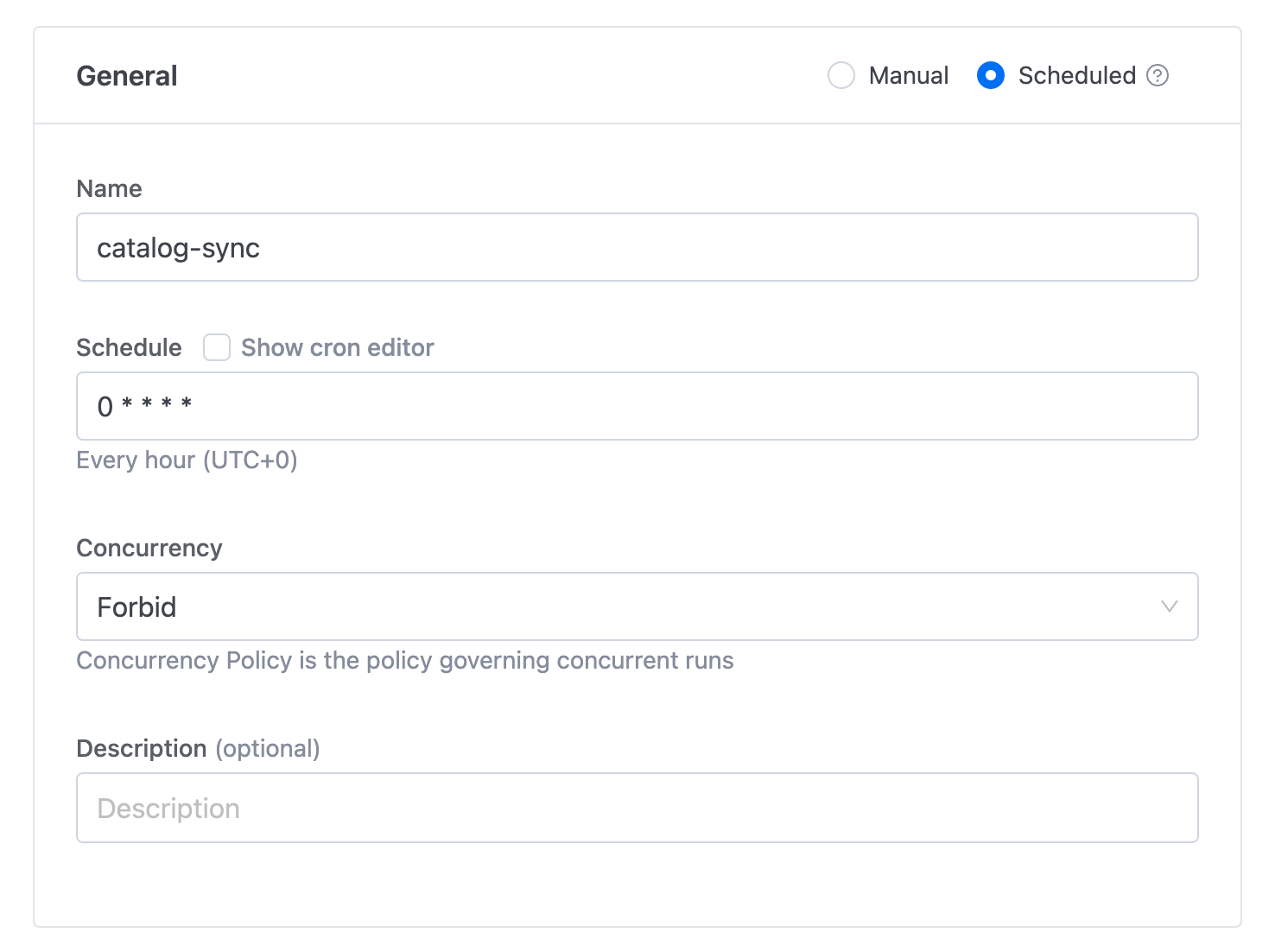

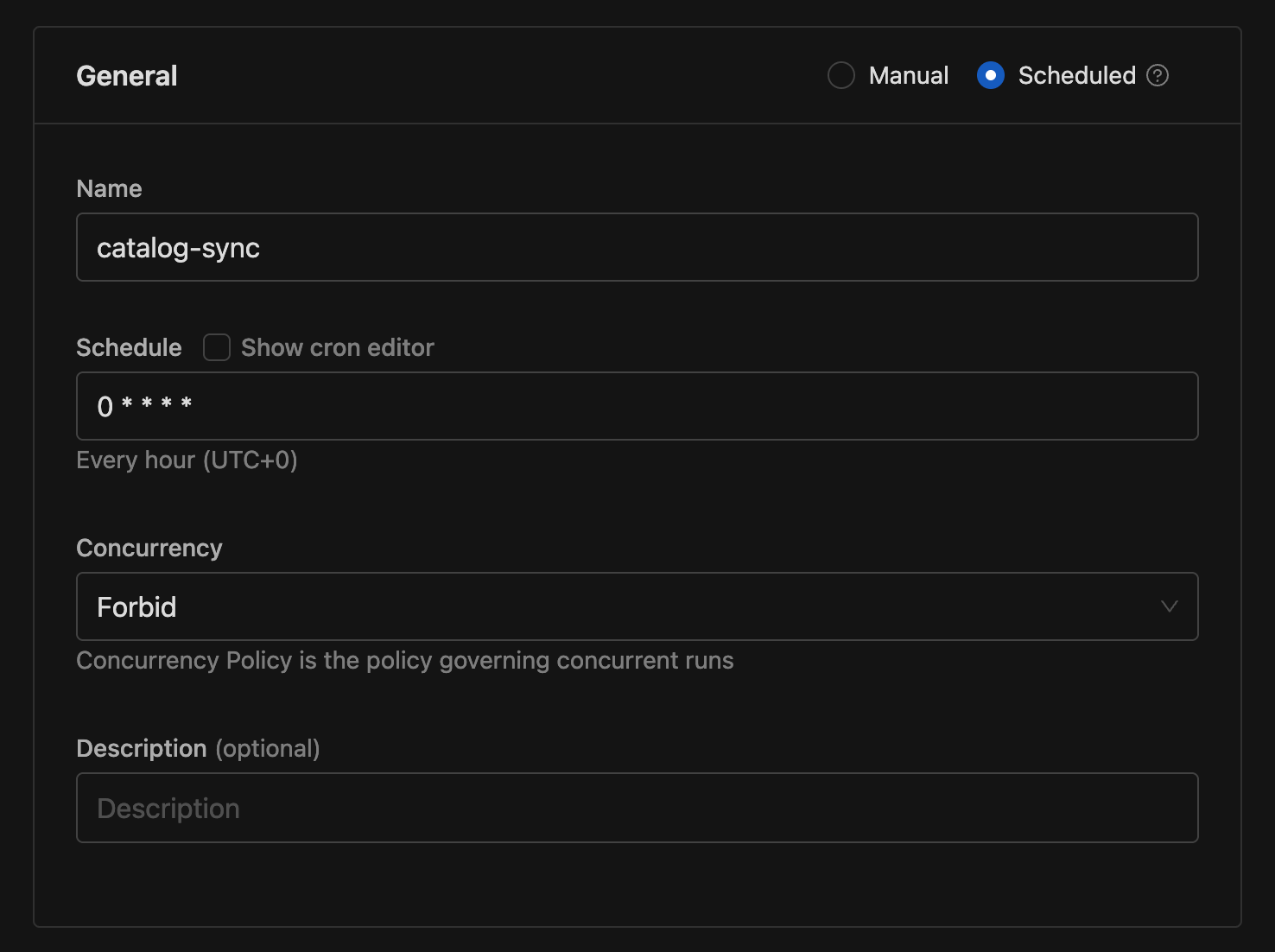

Fill in the following fields:

- Name: catalog-sync

- Schedule: 0 * * * * (runs every hour)

- Concurrency: FORBID

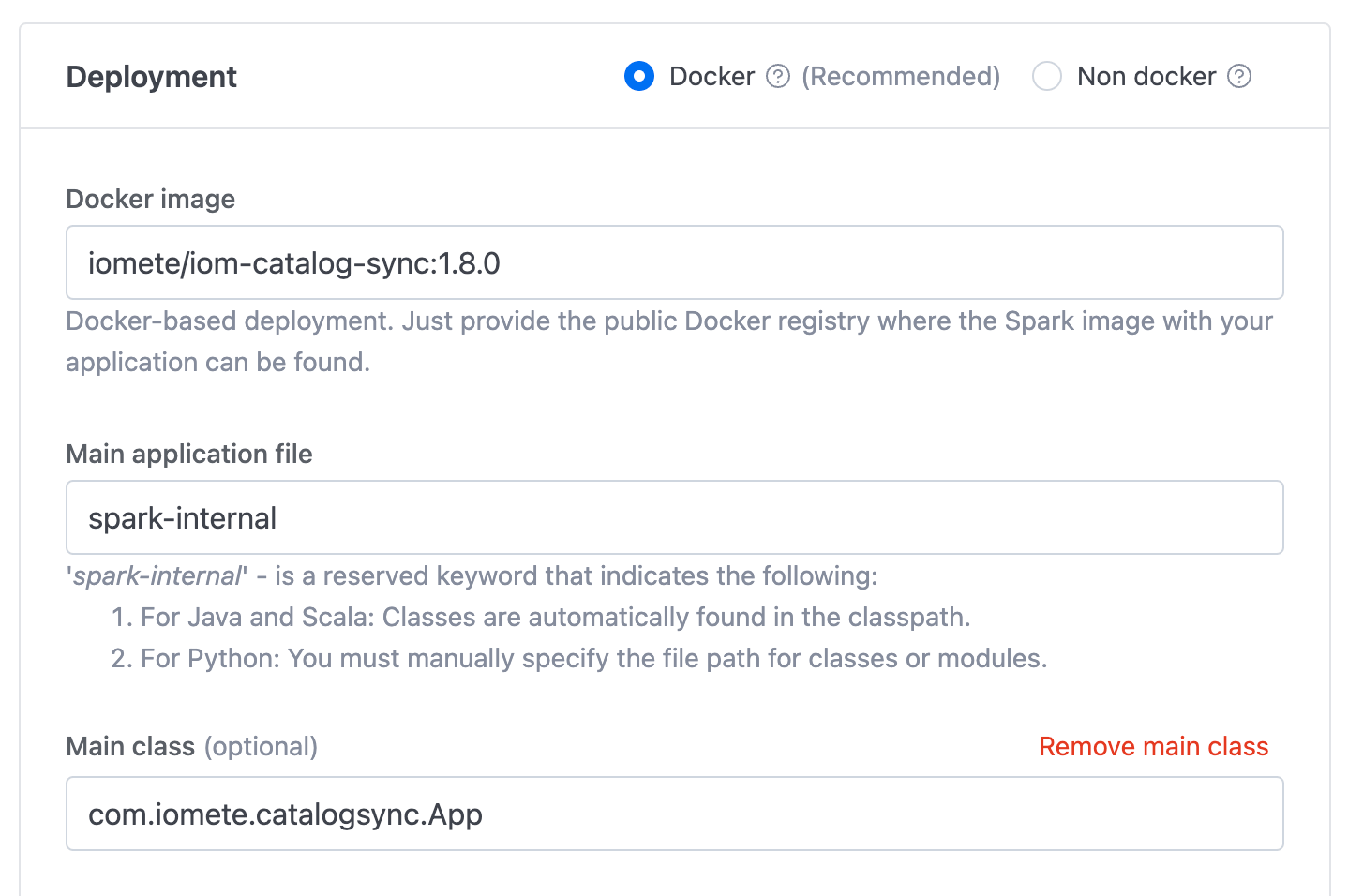

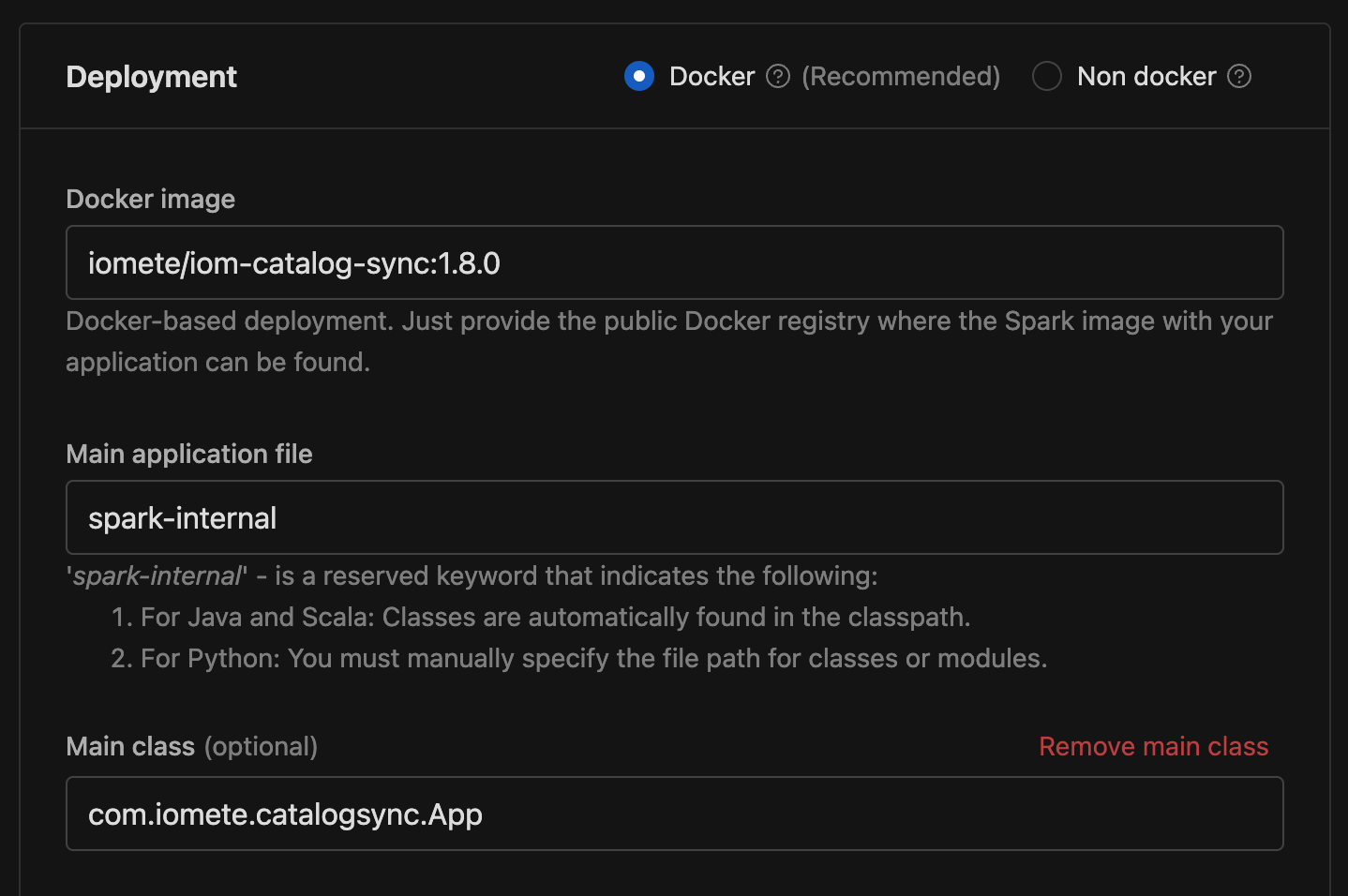

- Deployment:

  - Docker image: iomete/iom-catalog-sync:5.0.0

  - Main Application File: spark-internal

  - Main Class: com.iomete.catalogsync.App

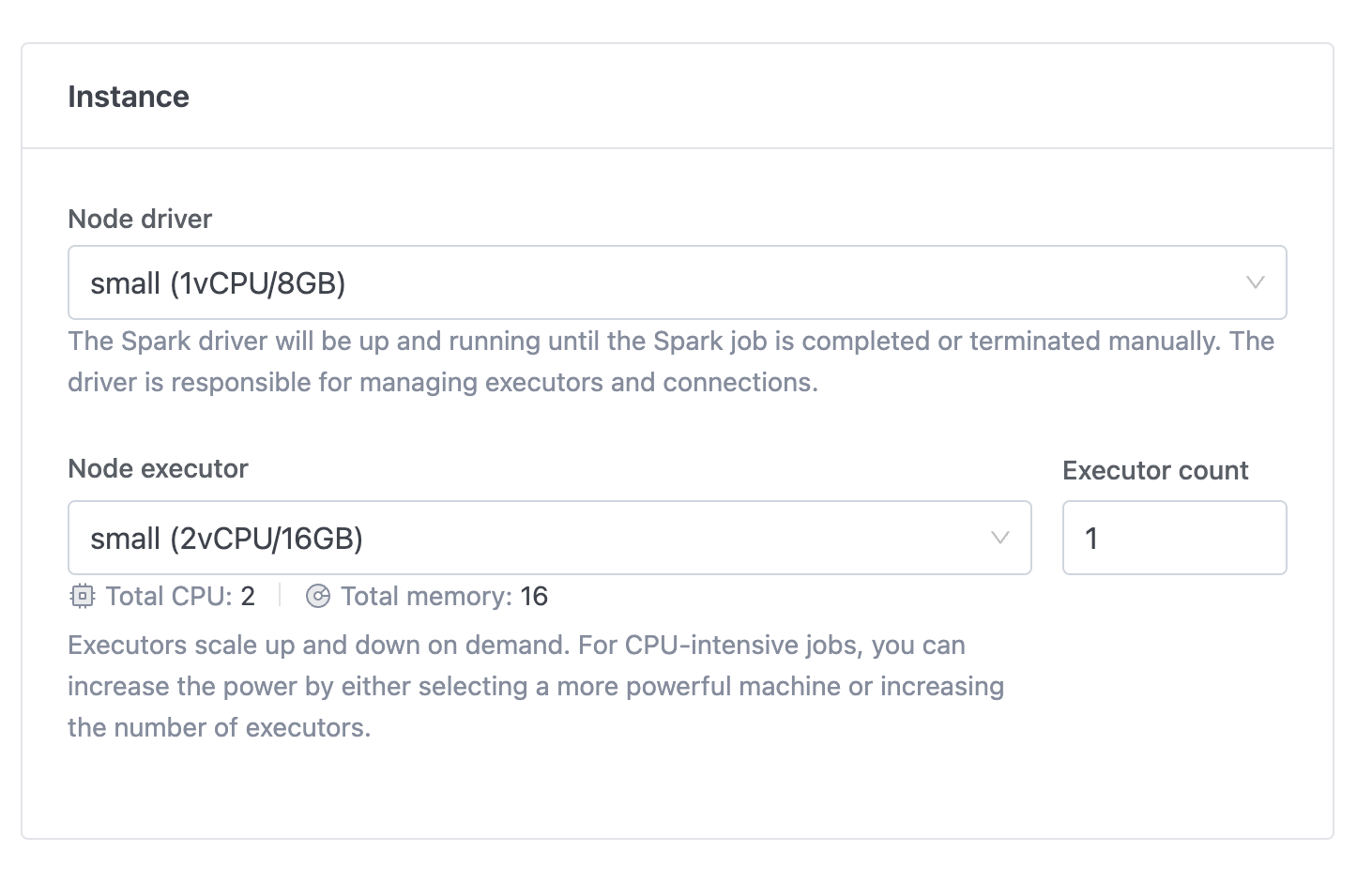

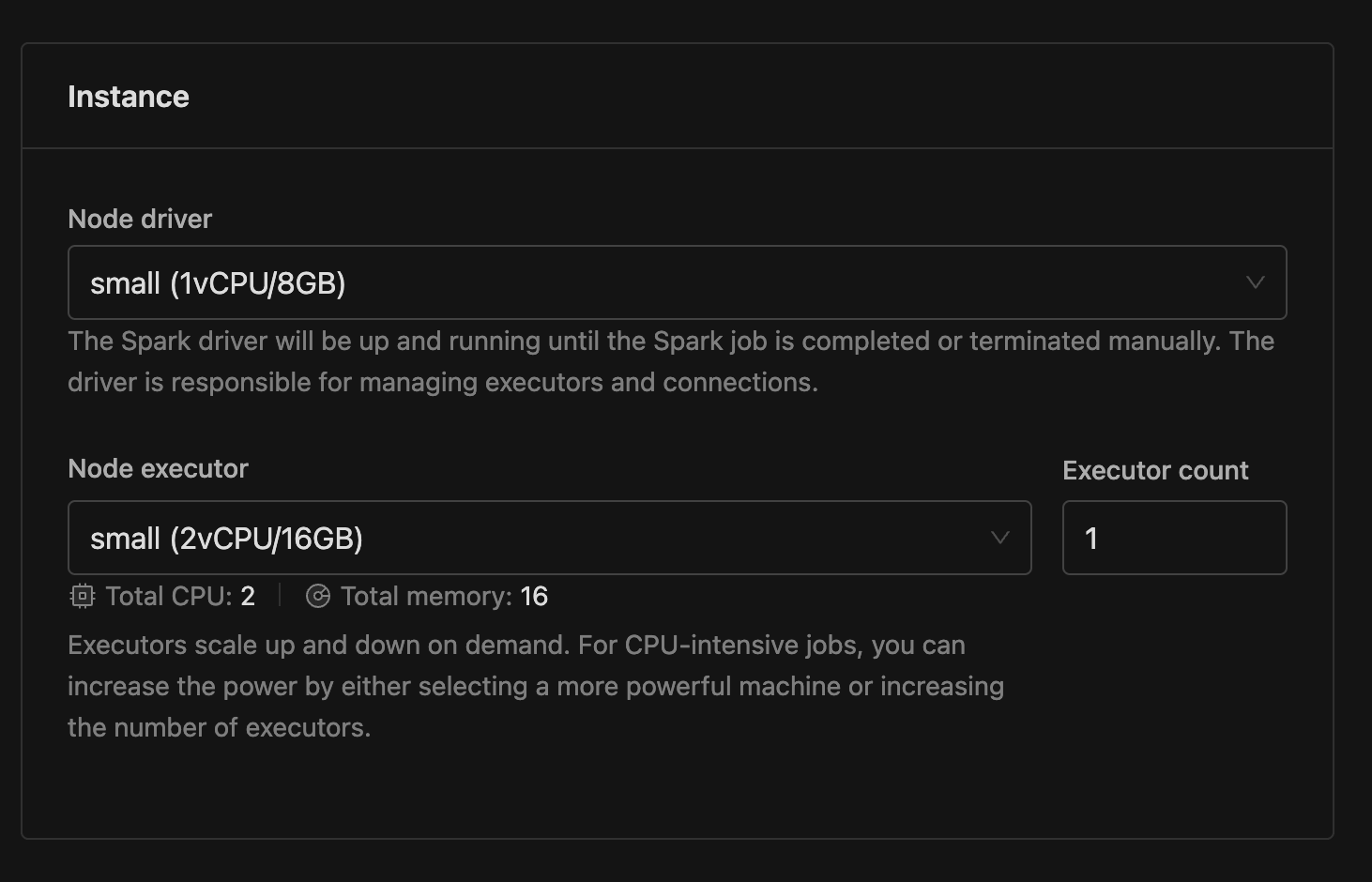

- Instance Config:

  - Driver Type: driver-small

  - Executor Type: exec-small

  - Executor Count: 1

Click Create to save the job.

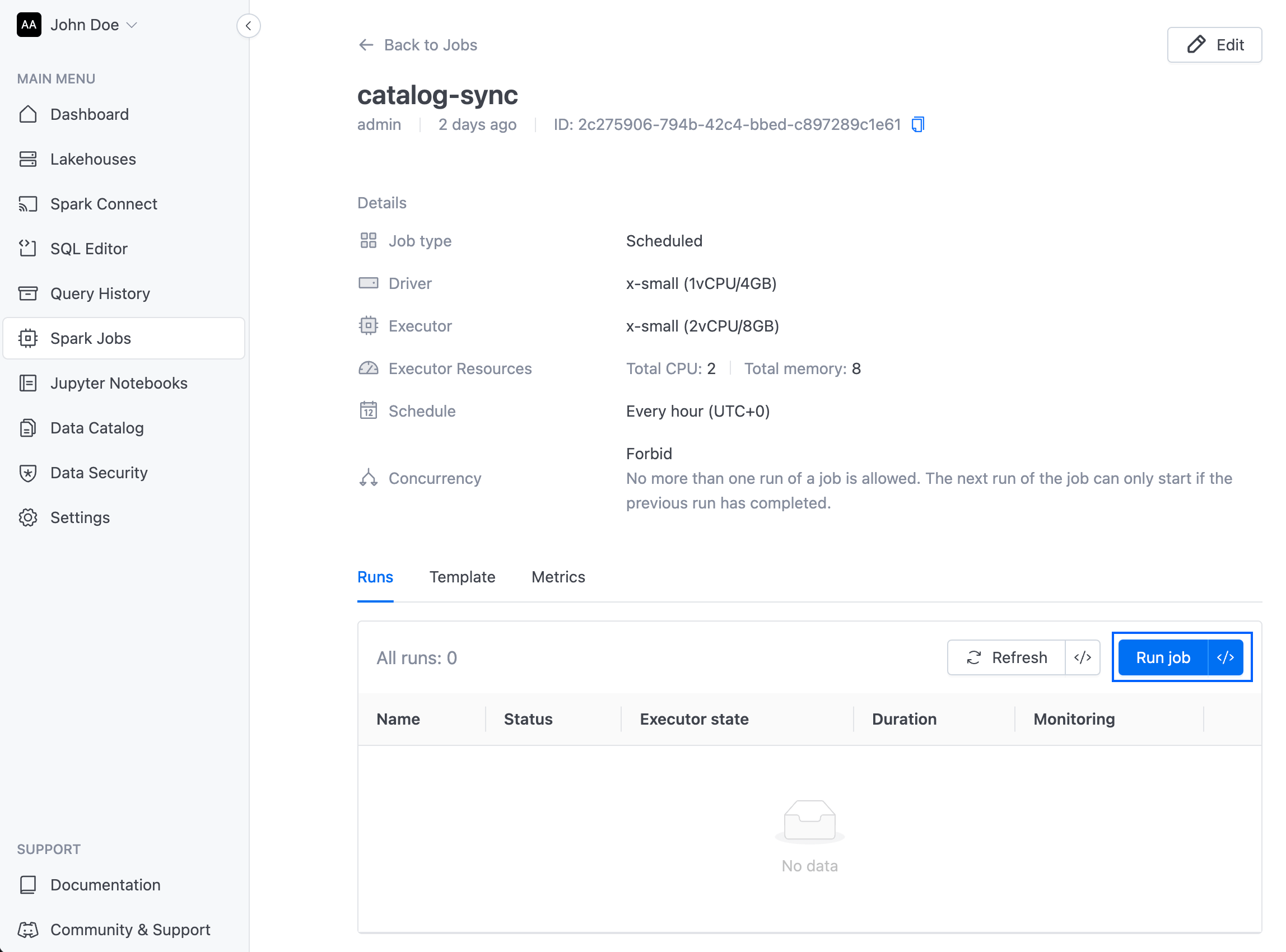

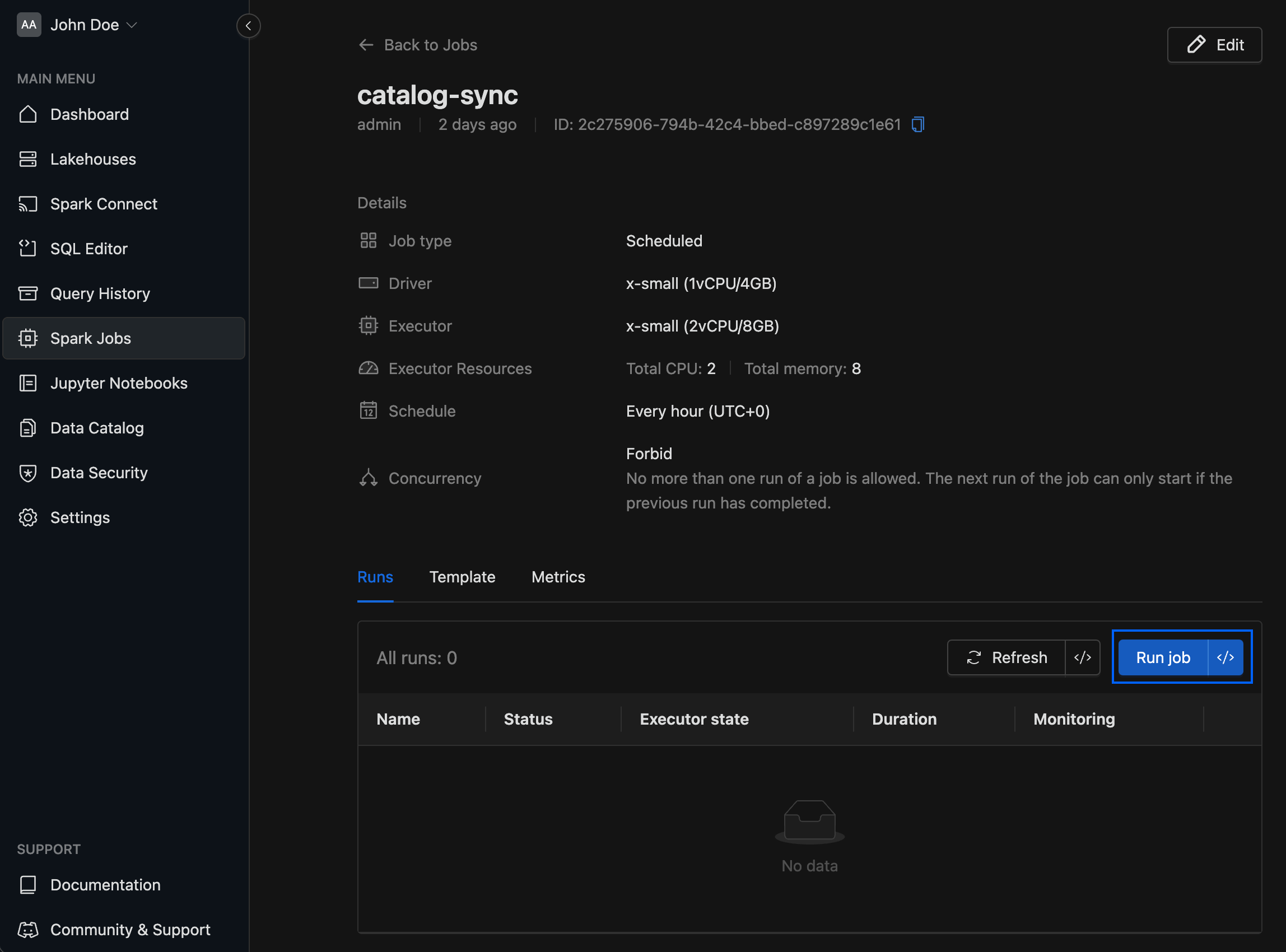

Running the Job

The job runs automatically based on its schedule. To trigger it manually, click Run job.

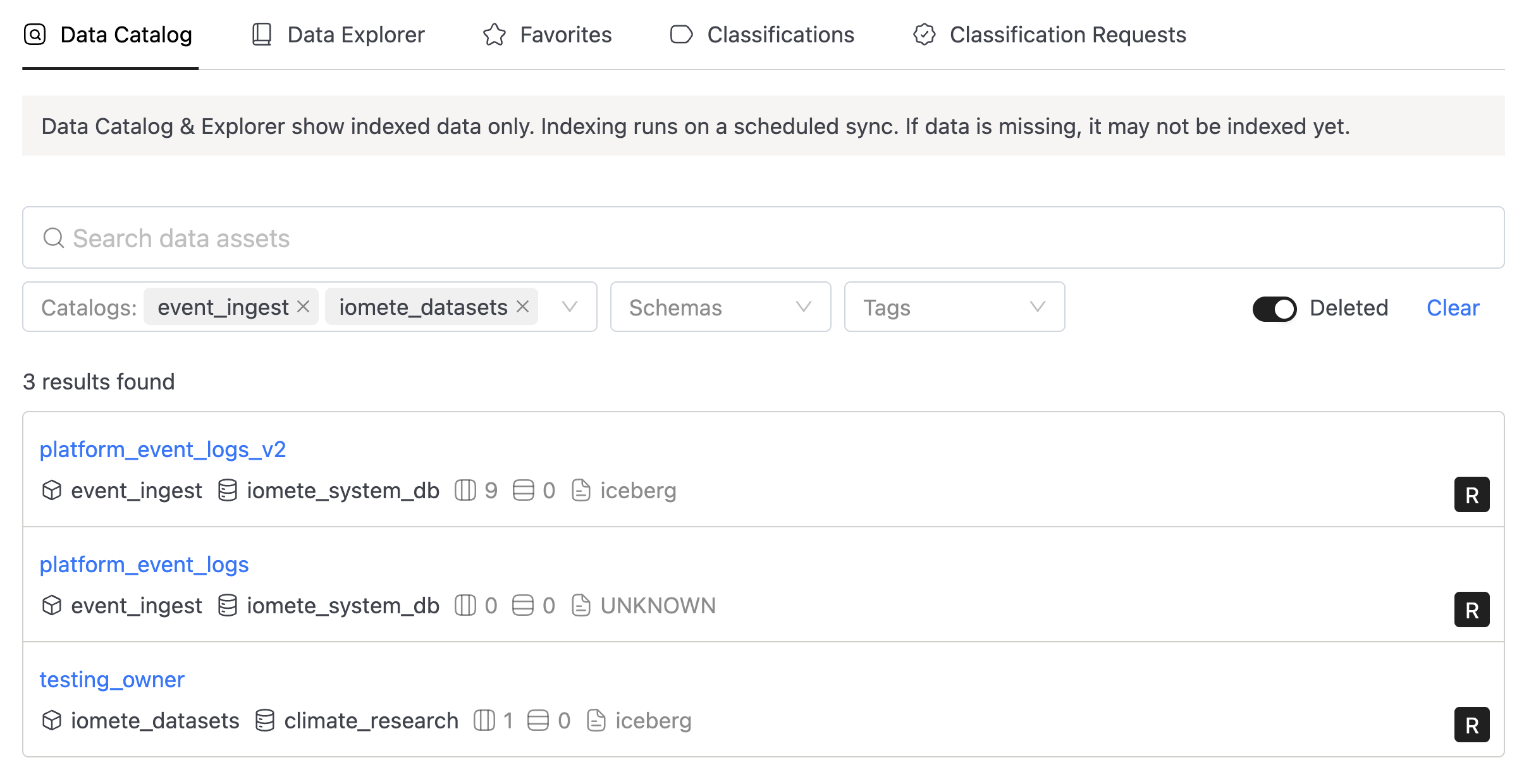

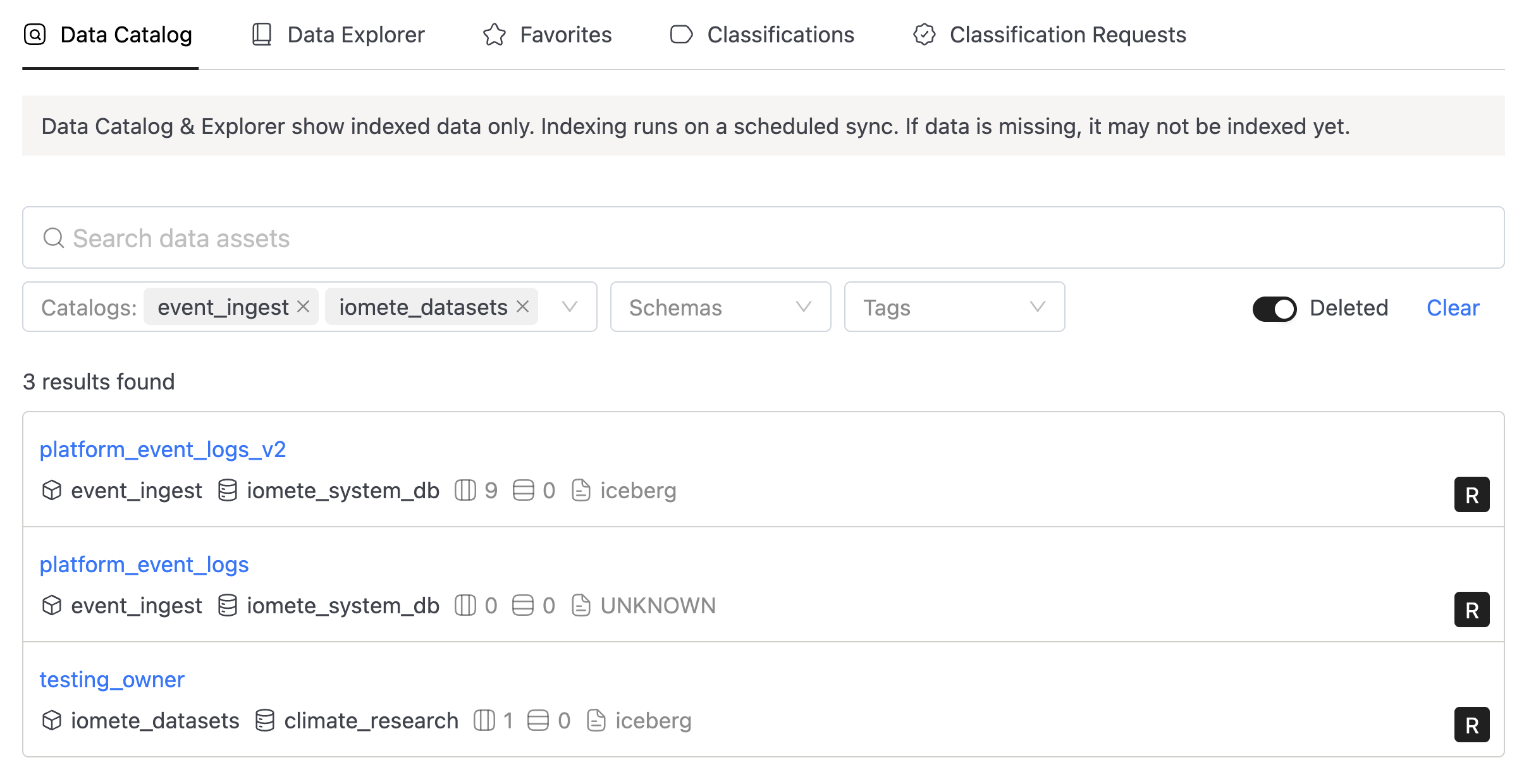

Deleted Tables

When a table is dropped from a catalog, it is not removed from the Data Catalog index. Instead, it is marked as deleted and hidden from the default view.

To see deleted tables, use the Deleted toggle in the Data Catalog UI. This lets you review what was removed and when.

Deleted entries are currently kept indefinitely. Automatic cleanup of old deleted entries is planned for a future release.
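The soft-delete behavior described above can be pictured with a small Python sketch. `IndexEntry`, `sync`, `visible`, and the `deleted_at` field are hypothetical names chosen for illustration; the job's real data model is not documented here, and the sketch assumes the Deleted toggle adds deleted entries to the listing.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, List, Optional, Set


@dataclass
class IndexEntry:
    """Hypothetical index record; not the job's real schema."""
    name: str
    deleted_at: Optional[datetime] = None

    @property
    def is_deleted(self) -> bool:
        return self.deleted_at is not None


def sync(index: Dict[str, IndexEntry], live_tables: Set[str], now: datetime) -> None:
    """Add newly seen tables and mark missing ones as deleted (never remove them)."""
    for name in live_tables:
        index.setdefault(name, IndexEntry(name))
    for name, entry in index.items():
        if name not in live_tables and not entry.is_deleted:
            entry.deleted_at = now  # records when the table was found missing


def visible(index: Dict[str, IndexEntry], show_deleted: bool = False) -> List[str]:
    """Default view hides deleted entries; the toggle includes them."""
    return sorted(n for n, e in index.items() if show_deleted or not e.is_deleted)


index: Dict[str, IndexEntry] = {}
sync(index, {"sales.orders", "sales.customers"}, datetime(2024, 1, 1, tzinfo=timezone.utc))
# sales.customers is dropped before the second run
sync(index, {"sales.orders"}, datetime(2024, 1, 2, tzinfo=timezone.utc))

print(visible(index))                     # default view
print(visible(index, show_deleted=True))  # with the Deleted toggle on
```

Because entries are only flagged, the index keeps growing until a cleanup mechanism exists, which matches the note above about indefinite retention.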

Exclusion Rules

Not every catalog needs to be indexed. For example, you may want to skip:

- An external catalog (e.g., Oracle) with millions of tables that would clutter search results

- Catalogs or schemas containing sensitive data that shouldn't appear in the Data Catalog

- Personal or sandbox environments used for development

You can configure exclusion rules in the job configuration to skip specific catalogs, namespaces (schemas/databases), or tables during sync.

Default Rule

The default rule applies at all levels — catalogs, schemas, and tables. If an asset has a matching property, it is excluded from indexing.

    {
      "exclusion_rules": {
        "default_rule": {
          "filter_by_properties": {
            "iomete.governance.index": "false"
          }
        }
      }
    }

With this rule in place, setting the property iomete.governance.index=false on any catalog, schema, or table will exclude it from the sync.

This is the simplest setup — define one rule and apply it wherever needed using properties.
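As a rough illustration of one rule applied at every level, the Python sketch below walks a made-up metadata tree and keeps only the tables that survive the default rule. The tree layout and the `matches` and `indexed_tables` helpers are hypothetical, and the sketch assumes that excluding a catalog or schema also skips everything inside it, which this page does not state explicitly.

```python
from typing import Dict, Iterator, Mapping

DEFAULT_RULE = {"filter_by_properties": {"iomete.governance.index": "false"}}


def matches(properties: Mapping[str, str], rule: Mapping[str, Mapping[str, str]]) -> bool:
    """True if the asset carries any property/value pair listed in the rule."""
    wanted = rule.get("filter_by_properties", {})
    return any(properties.get(key) == value for key, value in wanted.items())


def indexed_tables(catalogs: Dict[str, dict]) -> Iterator[str]:
    """Yield fully qualified names of tables that are not excluded.

    Assumption: an excluded catalog or schema hides all of its children.
    """
    for cat_name, cat in catalogs.items():
        if matches(cat.get("properties", {}), DEFAULT_RULE):
            continue
        for schema_name, schema in cat.get("schemas", {}).items():
            if matches(schema.get("properties", {}), DEFAULT_RULE):
                continue
            for table_name, table_props in schema.get("tables", {}).items():
                if not matches(table_props, DEFAULT_RULE):
                    yield f"{cat_name}.{schema_name}.{table_name}"


# Hypothetical metadata tree: catalog -> schema -> table -> properties.
catalogs = {
    "lakehouse": {
        "properties": {},
        "schemas": {
            "sales": {
                "properties": {},
                "tables": {
                    "orders": {},
                    "orders_tmp": {"iomete.governance.index": "false"},
                },
            },
            "scratch": {
                "properties": {"iomete.governance.index": "false"},
                "tables": {"t1": {}},
            },
        },
    },
    "sandbox": {
        "properties": {"iomete.governance.index": "false"},
        "schemas": {"dev": {"properties": {}, "tables": {"x": {}}}},
    },
}

print(list(indexed_tables(catalogs)))
```

In this made-up tree only `lakehouse.sales.orders` is left: one table, one schema, and one catalog are each filtered out by the same property.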

Excluding Catalogs

Catalogs can be excluded by name, by property, or both.

By name

List catalog names directly. These catalogs will always be skipped, regardless of their properties:

    {
      "exclusion_rules": {
        "catalogs": {
          "names": ["sandbox_dev", "legacy_warehouse", "user_personal_spaces"]
        }
      }
    }

By property

Set the iomete.governance.index property to false on the catalog in the Admin Portal when creating or editing it.

Then add the corresponding filter:

    {
      "exclusion_rules": {
        "catalogs": {
          "filter_by_properties": {
            "iomete.governance.index": "false"
          }
        }
      }
    }

Combining both

You can use names and properties together. A catalog is excluded if it matches either condition:

    {
      "exclusion_rules": {
        "catalogs": {
          "names": ["sandbox_dev", "legacy_warehouse"],
          "filter_by_properties": {
            "iomete.governance.index": "false"
          }
        }
      }
    }
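The either-condition behavior for catalogs can be sketched in Python as follows. `catalog_excluded` is a hypothetical helper, and exact, case-sensitive matching of names and property values is an assumption of this sketch rather than documented behavior.

```python
from typing import Mapping

# Mirrors the "catalogs" rule from the combined example.
rule = {
    "names": ["sandbox_dev", "legacy_warehouse"],
    "filter_by_properties": {"iomete.governance.index": "false"},
}


def catalog_excluded(name: str, properties: Mapping[str, str], rule: Mapping) -> bool:
    """A catalog is skipped if its name is listed OR a property filter matches."""
    by_name = name in rule.get("names", [])
    by_property = any(
        properties.get(key) == value  # assumption: exact string comparison
        for key, value in rule.get("filter_by_properties", {}).items()
    )
    return by_name or by_property


# Listed by name, no property needed.
print(catalog_excluded("sandbox_dev", {}, rule))
# Not listed, but carries the property.
print(catalog_excluded("oracle_ext", {"iomete.governance.index": "false"}, rule))
# Neither condition holds, so the catalog is indexed.
print(catalog_excluded("lakehouse", {"iomete.governance.index": "true"}, rule))
```

Listing a name is useful when you cannot or do not want to edit the catalog's properties; the property filter lets catalog owners opt out without changing the job configuration.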

Excluding Namespaces (Schemas/Databases)

Namespaces are excluded by property. To mark a namespace for exclusion, set the property using SQL:

ALTER DATABASE my_catalog.my_schema SET DBPROPERTIES ('iomete.governance.index' = 'false');

To verify the property:

DESCRIBE DATABASE EXTENDED my_catalog.my_schema;

To re-enable indexing later, set the property back to true:

ALTER DATABASE my_catalog.my_schema SET DBPROPERTIES ('iomete.governance.index' = 'true');

Then add the exclusion rule:

    {
      "exclusion_rules": {
        "schemas": {
          "filter_by_properties": {
            "iomete.governance.index": "false"
          }
        }
      }
    }

Excluding Tables

Tables work the same way as namespaces — set a property on the table, then define the matching rule.

ALTER TABLE my_catalog.my_schema.my_table SET TBLPROPERTIES ('iomete.governance.index' = 'false');

To verify the property:

SHOW TBLPROPERTIES my_catalog.my_schema.my_table;

To remove the property later:

ALTER TABLE my_catalog.my_schema.my_table UNSET TBLPROPERTIES ('iomete.governance.index');

Then add the exclusion rule:

    {
      "exclusion_rules": {
        "tables": {
          "filter_by_properties": {
            "iomete.governance.index": "false"
          }
        }
      }
    }

Multiple properties (OR logic)

When multiple properties are defined in a table exclusion rule, a table is excluded if any one of the properties matches:

    {
      "exclusion_rules": {
        "tables": {
          "filter_by_properties": {
            "iomete.governance.index": "false",
            "hidden": "true"
          }
        }
      }
    }

Here, a table is excluded if iomete.governance.index is false or hidden is true. It does not need to match both.
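To make the difference between OR and AND matching concrete, the Python sketch below evaluates the two-property filter against a few made-up tables. The table names and the `excluded_any` / `excluded_all` helpers are hypothetical; only the OR variant reflects the behavior described above, and the AND variant is shown purely for contrast.

```python
from typing import Dict, List, Mapping

filters = {"iomete.governance.index": "false", "hidden": "true"}

# Hypothetical tables and their properties.
tables: Dict[str, Dict[str, str]] = {
    "orders": {},
    "orders_tmp": {"hidden": "true"},
    "pii_raw": {"iomete.governance.index": "false", "hidden": "false"},
    "archive": {"iomete.governance.index": "false", "hidden": "true"},
}


def excluded_any(props: Mapping[str, str], filters: Mapping[str, str]) -> bool:
    """OR logic (documented): one matching property is enough."""
    return any(props.get(key) == value for key, value in filters.items())


def excluded_all(props: Mapping[str, str], filters: Mapping[str, str]) -> bool:
    """AND logic (NOT what the job does): every property must match."""
    return all(props.get(key) == value for key, value in filters.items())


def excluded_names(check) -> List[str]:
    return sorted(name for name, props in tables.items() if check(props, filters))


print("OR :", excluded_names(excluded_any))
print("AND:", excluded_names(excluded_all))
```

Under OR logic three of the four tables are skipped, while AND logic would skip only `archive`. Keep this in mind when adding properties to a rule: each extra entry widens the exclusion rather than narrowing it.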

Full Example

A complete configuration using all exclusion levels:

    {
      "exclusion_rules": {
        "catalogs": {
          "names": ["sandbox_dev", "legacy_warehouse", "user_personal_spaces"],
          "filter_by_properties": {
            "iomete.governance.index": "false"
          }
        },
        "schemas": {
          "filter_by_properties": {
            "iomete.governance.index": "false"
          }
        },
        "tables": {
          "filter_by_properties": {
            "iomete.governance.index": "false",
            "hidden": "true"
          }
        },
        "default_rule": {
          "filter_by_properties": {
            "iomete.governance.index": "false"
          }
        }
      }
    }
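Before pasting a configuration like this into the job, it can help to check it locally. The Python sketch below is a hypothetical lint, not part of the product: it only knows the keys that appear in this page's examples and treats anything else, including non-string property values such as a bare `false`, as a problem. Those strictness choices are assumptions of the sketch.

```python
import json
from typing import List

# Keys seen in this page's examples; rejecting others is an assumption.
LEVELS = {"catalogs", "schemas", "tables", "default_rule"}


def lint_exclusion_config(text: str) -> List[str]:
    """Return human-readable problems; an empty list means none were found."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]

    rules = config.get("exclusion_rules") if isinstance(config, dict) else None
    if not isinstance(rules, dict):
        return ["missing top-level 'exclusion_rules' object"]

    problems: List[str] = []
    for level, rule in rules.items():
        if level not in LEVELS:
            problems.append(f"unknown level '{level}'")
            continue
        if not isinstance(rule, dict):
            problems.append(f"'{level}' should be an object")
            continue
        for key, value in rule.items():
            if key == "names":
                # The examples only show name lists for catalogs.
                if level != "catalogs":
                    problems.append(f"'names' found under '{level}'")
            elif key == "filter_by_properties":
                if not isinstance(value, dict):
                    problems.append(f"'{level}.filter_by_properties' should be an object")
                    continue
                for prop, expected in value.items():
                    if not isinstance(expected, str):
                        problems.append(f"{level}.{prop}: use a string such as \"false\"")
            else:
                problems.append(f"unknown key '{key}' under '{level}'")
    return problems


sample = """
{"exclusion_rules": {"tables": {"names": ["t"],
                                "filter_by_properties": {"hidden": true}}}}
"""
for problem in lint_exclusion_config(sample):
    print(problem)
```

The sample deliberately contains two mistakes (a `names` list under `tables` and an unquoted boolean), so the lint reports both; the full example above passes without findings.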