Cloudera Alternatives

Cloudera was founded in 2008 by some of the brightest minds at Silicon Valley's leading companies, including Google, Yahoo!, Oracle, and Facebook. And in 2011, 24 engineers from the original Hadoop team at Yahoo! spun out to form Hortonworks. It’s been 15 years since Cloudera was founded today it is owned by private equity.

Hadoop’s origins

Hadoop's origins can be traced back to 2006 when Doug Cutting and Mike Cafarella at Yahoo! released the project as open-source software. Inspired by Google's GFS and MapReduce papers, Hadoop aimed to provide a scalable and cost-effective way to store and process large amounts of data.

Cloudera's early contributions

- Cloudera Distribution of Hadoop (CDH): Cloudera released CDH, a fully packaged and supported Hadoop distribution that simplified installation and management for enterprises.

- Cloudera Manager: Cloudera developed Cloudera Manager, a centralized management tool for monitoring and administering Hadoop clusters.

- Cloudera Impala: Cloudera introduced Impala, a high-performance SQL query engine that enabled interactive analysis of data stored in HDFS.

Cloudera's Impact on Hadoop Adoption

Cloudera played a pivotal role in popularizing Hadoop and making it a mainstream enterprise data platform. The company's commercial distributions, tools, and expertise helped organizations overcome the complexities of Hadoop adoption and realize its potential for big data analytics.

Cloudera's contributions to the Hadoop ecosystem extended beyond software development. The company actively participated in the Apache Hadoop project, leading and co-leading the development of key features and components. Cloudera also fostered a vibrant community of Hadoop users and contributors through conferences, training programs, and open-source initiatives.

Cloudera's Continued Leadership

Over the years, Cloudera has maintained its position as a leading provider of Hadoop solutions. The company has expanded its product portfolio to include a broader range of data management, analytics, and machine learning tools, all built on top of the Hadoop core.

Cloudera has also played a crucial role in the evolution of Hadoop, embracing advancements such as YARN, Spark, and HBase, and incorporating them into its enterprise platform. The company has also contributed to the development of cloud-based Hadoop solutions, recognizing the growing importance of cloud computing in big data deployments.

Hadoop limitations

While Hadoop was a revolutionary platform for big data processing, it has been around for nearly two decades and not without its limitations. Here are some of the key issues with Hadoop:

- Scalability: Hadoop's scalability is limited by its reliance on NameNode, a single point of failure that can bottleneck performance as data volume grows. This can make it challenging to handle massive datasets efficiently.

- Complexity: Hadoop's architecture and configuration can be complex, requiring specialized expertise to manage and maintain large clusters. This can be a barrier for organizations with limited technical resources.

- Performance: Hadoop's MapReduce programming model can be inefficient for certain types of data processing, particularly iterative or interactive workloads. More modern frameworks like Spark and Flink offer better performance for these use cases.

- Real-time Processing: Hadoop's traditional batch processing approach is not well-suited for real-time data analysis and decision-making. Technologies like Apache Kafka and Apache Storm are better suited for real-time data streams.

- Integration with Existing Systems: Integrating Hadoop with existing data sources and analytics tools can be challenging due to its unique architecture and data formats. This can hinder data integration and limit the ability to leverage existing infrastructure.

- Security: Hadoop's default security model is based on Kerberos, which can be complex to implement and manage. Additionally, Hadoop's distributed nature makes it vulnerable to certain security threats.

- Cost: While Hadoop can be cost-effective for storing and processing large amounts of data, the hardware and software costs associated with large clusters can be significant. Additionally, the expertise required to manage and maintain Hadoop clusters can add to the overall cost of ownership.

Despite these limitations, Hadoop remains a tool for big data analytics for many organizations.

Alternatives for Cloudera and Hadoop

- IOMETE

IOMETE is a modern data lakehouse solution that unifies all your data - regardless of where your data resides - enabling large-scale analytics (BI/ML/AI) on your entire data set. IOMETE runs on Apache Iceberg and Apache Spark. IOMETE makes it possible to analyze petabyte size across on-premise data centers and cloud environments.

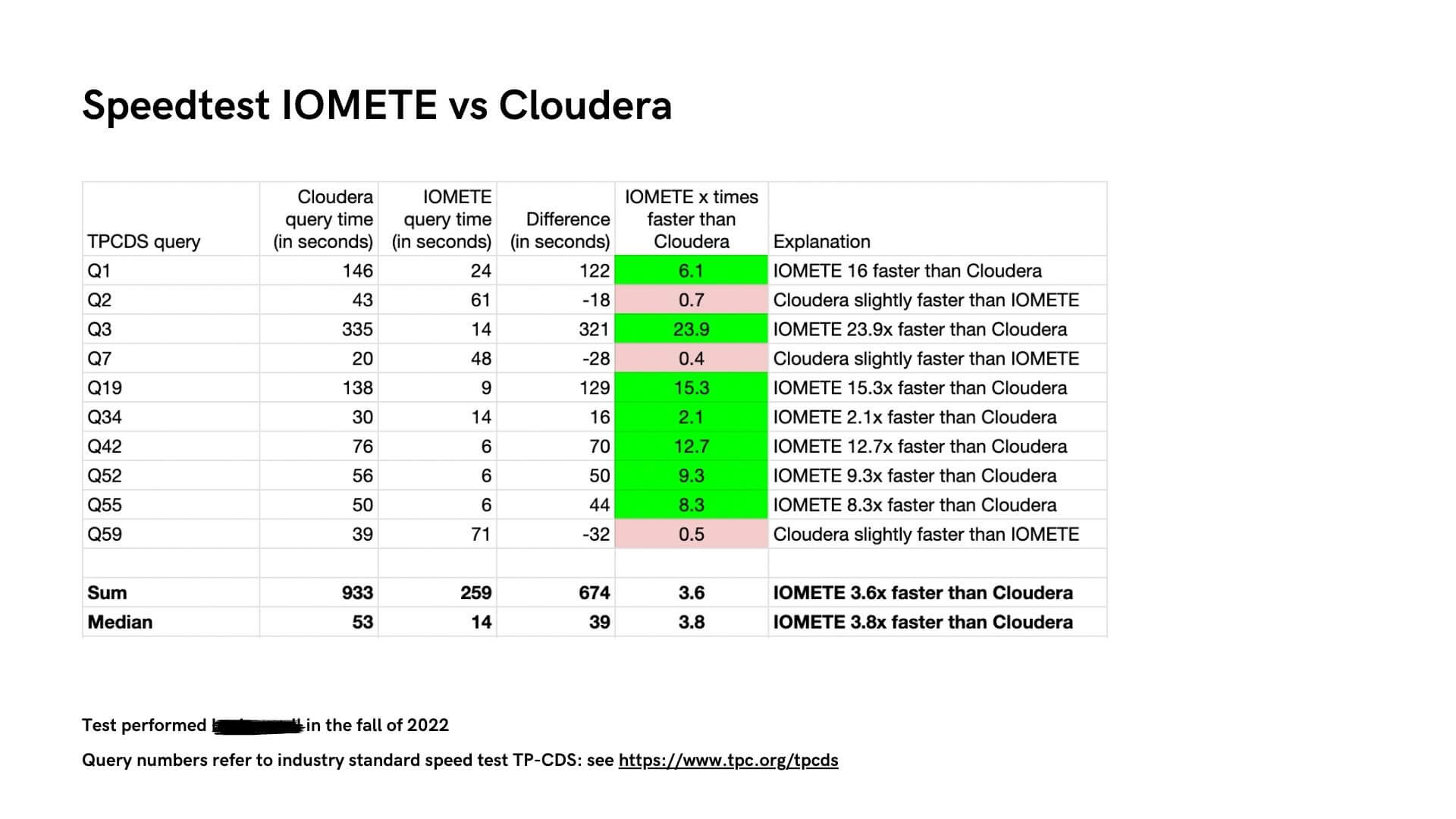

The performance improvement that this organization observed might are not a guarantee that the same will happen in other environments. IOMETE encourages testing in your own environment, with your own cloud or hardware setup, to allow for a real comparison. If you “Book a Demo” we can help you to test IOMETE in your environment and use cases.

- Kubernetes for Compute

For compute-intensive workloads, big data frameworks like Apache Flink and Apache Spark are now offering excellent support for Kubernetes. Kubernetes can replace YARN for many more minor use cases, providing a flexible, cost-effective alternative.

- Open Source S3 Implementations

To replace HDFS for storage, consider open-source S3-compatible solutions like MinIO or Ceph. These services can be orchestrated by Kubernetes, making them a seamless choice. Hadoop libraries can access S3 transparently, simplifying the transition.

- Building Your Own Distribution

While Cloudera's Ambari offered convenience, building your own distribution is a viable alternative. This approach grants you complete control over configurations and upgrades. You can use tools like Ansible to set up standard Apache projects and even build customized distributions if necessary.

- Cloud Vendor Distributions

For smaller companies, leveraging cloud vendor distributions like AWS EMR and GCP Dataproc can be the simplest option. These managed services take care of infrastructure, allowing you to focus on your data workloads. Solutions like Snowflake and BigQuery can also replace Hadoop for various use cases with minimal hassle.

- Bigtop

Bigtop is an open-source project that aims to create a comprehensive packaging, testing, and configuration ecosystem for big data applications. It can be a suitable alternative to Cloudera's offerings, providing flexibility and customization options.

- Databricks

Provides a unified data analytics platform, built on Apache Spark, that facilitates collaboration between data scientists, engineers, and business analysts. Databricks offers cloud-based big data processing and machine learning solutions.

Can Databricks run on-premise?

No. Databricks run only in cloud envrironments. You can check IOMETE if you want on-prem solution.

- Snowflake

A cloud data platform that offers a data warehouse-as-a-service which supports various cloud providers. It enables data storage, processing, and analytic solutions that are faster and easier to use than traditional offerings. Snowflake also doesn't offer on-premise data lakehouse solution as they work only for cloud providers

Conclusion

Data infrastructure solutions are evolving, and organizations should evaluate their specific needs and choose the solution that best aligns with their goals and resources. We encourage you to reflect on the strategies discussed and consider how they might be applied within your own data engineering practices to overcome the challenges of big data. If you want to discuss more. Remember, the goal is not just to manage data but to unlock its value for strategic advantage.